In the late 2000s, Netflix faced a critical challenge. With millions of users watching movies on demand, its legacy data centers couldn’t keep up with growth, leading to outages and slow deployments. Every new feature release felt like a gamble that could bring the system down.

Netflix had two choices:

- Keep patching a crumbling infrastructure

- Or rebuild from the ground up with cloud-native architecture.

Fortunately, they chose to rebuild.

By migrating its operations to Amazon Web Services (AWS), Netflix transitioned from monolithic applications to a microservices-based architecture. This shift enabled them to deploy thousands of servers and terabytes of storage within minutes, ensuring seamless global service delivery.

If you’re a C-suite executive or enterprise leader, you’re likely grappling with similar challenges:

- Slow time-to-market due to monolithic systems

- High operational costs from maintaining legacy infrastructure

- Scalability bottlenecks

- Downtime that threatens customer experience

In this blog, we’ll break down the key cloud native architecture principles and best practices that drive success, so you can:

- Accelerate software development (SDLC)

- Improve reliability

- Scale effortlessly with demand

- Future-proof your infrastructure

The question isn’t whether to go cloud-native; it’s how to do it right. Let’s dive in.

Core Cloud Native Architecture Principles

Adopting cloud-native architecture isn’t just about shifting workloads to the cloud; it’s about rethinking how software is built, deployed, and scaled. Enterprises that get it right gain agility, resilience, and room to innovate. Those that don’t risk turning the cloud into an expensive, underutilized data center.

So, where do you begin? Start with the cloud native architecture principles that matter most; the benefits follow from applying them consistently.

1. Design for Scalability

Your application should be able to handle sudden spikes in traffic without experiencing any issues. That means using auto-scaling, load balancing, and decoupled services to distribute the load efficiently.

Cloud-native solutions scale up or down based on demand. Whether it’s a viral campaign or an unexpected surge, a well-architected system ensures your app remains responsive and resilient.

The perfect example is Netflix, which streams over 6 billion hours of content to 220+ million users. Their cloud-native infrastructure allows them to scale services in real-time, based on regional or global demand.
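To make auto-scaling less abstract, here is a minimal sketch of a proportional scaling rule, similar in spirit to the formula documented for the Kubernetes Horizontal Pod Autoscaler. The function name, the default bounds, and the CPU figures are illustrative assumptions; real autoscalers add tolerances, cooldowns, and stabilization windows on top of this.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Scale the replica count by the ratio of observed load to target load."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds so a spike cannot scale without limit.
    return max(min_replicas, min(max_replicas, raw))


# 4 replicas running at 90% CPU against a 60% target -> scale out to 6.
print(desired_replicas(4, 90, 60))
# 4 replicas running at 15% CPU against a 60% target -> scale in to 1.
print(desired_replicas(4, 15, 60))
```

The clamp is the part that matters for cost: the upper bound caps spend during a surge, and the lower bound keeps the service available when traffic is quiet.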

2. Embrace Microservices for Modularity

Microservices break down large applications into smaller, independent services—each with its own logic, database, and deployment cycle. This allows faster development, easier testing, and failure isolation.

Example: Amazon restructured its monolithic architecture in the early 2000s into microservices, enabling independent deployments and reducing the risk of system-wide outages.

Explore how microservices compare to monolithic architecture in real-world enterprise use cases.

3. Use Containers & Kubernetes to Simplify Delivery

Containers package your applications and dependencies into lightweight, portable units. They isolate services using Linux kernel features such as namespaces and cgroups, making them efficient and quick to spin up.

Unlike VMs, containers share the host OS but remain independent. They’re ideal for scaling and portability.

But managing hundreds or thousands of containers manually? That’s where Kubernetes comes in. It automates deployment, scaling, health checks, and more, across multi-cloud or hybrid environments.

Looking to scale with Kubernetes? Start with a solid enterprise strategy.

4. Automate Everything with DevOps & CI/CD

Cloud-native is as much about how you build software as where it runs. Manual deployments and multi-level approvals slow you down.

With CI/CD pipelines, enterprises can roll out updates multiple times a day, just like Amazon and Google do. DevOps automation ensures rapid innovation without compromising production stability.

5. Build Resilience Through Distributed Systems

In the past, a single server crash could result in hours of downtime. Cloud-native applications assume failures will happen and are designed to recover from them. Plan for failure with practices such as circuit breakers, retries, timeouts, and fallbacks.

Example: Netflix pioneered chaos engineering with tools like Chaos Monkey, which deliberately terminates instances in production to test resilience and ensure recovery is baked in.

When done right, your users never notice a failure. That’s the real test of resilience.
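The resilience practices named above can be sketched in a few lines. This is a simplified illustration, not a production library: `CircuitBreaker`, `call_with_resilience`, and their parameters are hypothetical names, and per-call timeouts are assumed to be enforced inside the wrapped function (for example, by an HTTP client timeout).

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker is open and calls are short-circuited."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0,
                 clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit open; skipping call")
            # Reset timeout elapsed: let one trial call through (half-open).
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # open (or re-open) the circuit
            raise
        # Success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result


def call_with_resilience(breaker, func, fallback, retries=2,
                         base_delay=0.1, sleep=time.sleep):
    """Retry with exponential backoff, then fall back to a degraded answer."""
    for attempt in range(retries + 1):
        try:
            return breaker.call(func)
        except CircuitOpenError:
            break  # do not hammer a dependency that is known to be down
        except Exception:
            if attempt < retries:
                sleep(base_delay * 2 ** attempt)
    return fallback()
```

A caller might wrap a recommendations lookup with `call_with_resilience(breaker, fetch_recommendations, lambda: cached_top_titles)`, where both names are placeholders: users see a slightly stale list instead of an error page.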

6. Prioritize Observability & Monitoring

Traditional monitoring only tells you when something breaks. Observability helps you understand why.

By combining logs, metrics, and traces, teams gain full visibility into system health and performance. Tools like Prometheus, Grafana, and OpenTelemetry give you the insights needed to detect anomalies early and resolve issues before users are impacted.
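As a rough illustration of how the three signals fit together, the sketch below uses only the Python standard library: a structured log line, a request counter, and a trace ID that ties the log lines for one request together. The service name, field names, and `handle_request` function are assumptions for the example; in practice an SDK such as OpenTelemetry would generate and propagate these for you.

```python
import json
import time
import uuid
from collections import Counter

# In-memory stand-in for a metrics backend.
metrics = Counter()


def log_event(trace_id, service, message, **fields):
    """Emit one structured (JSON) log line that carries the trace ID."""
    record = {"ts": time.time(), "trace_id": trace_id,
              "service": service, "message": message, **fields}
    line = json.dumps(record)
    print(line)
    return line


def handle_request(path):
    """Handle a request while recording a log, a metric, and a trace ID."""
    trace_id = uuid.uuid4().hex  # shared by every log line for this request
    start = time.perf_counter()
    log_event(trace_id, "checkout", "request started", path=path)
    metrics["requests_total"] += 1
    duration_ms = (time.perf_counter() - start) * 1000
    log_event(trace_id, "checkout", "request finished",
              path=path, duration_ms=round(duration_ms, 3))
    return trace_id


handle_request("/checkout")
```

Because every line carries the same `trace_id`, an engineer can move from "the error rate metric went up" to the exact requests that failed, which is the "why" that plain monitoring lacks.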

Together, these principles lay the foundation for resilient, scalable, and efficient cloud-native systems. But turning principles into practice requires a deliberate approach, especially when you’re scaling across the enterprise. That’s where best practices come in. Let’s explore how to apply these principles for real-world cloud-native success.

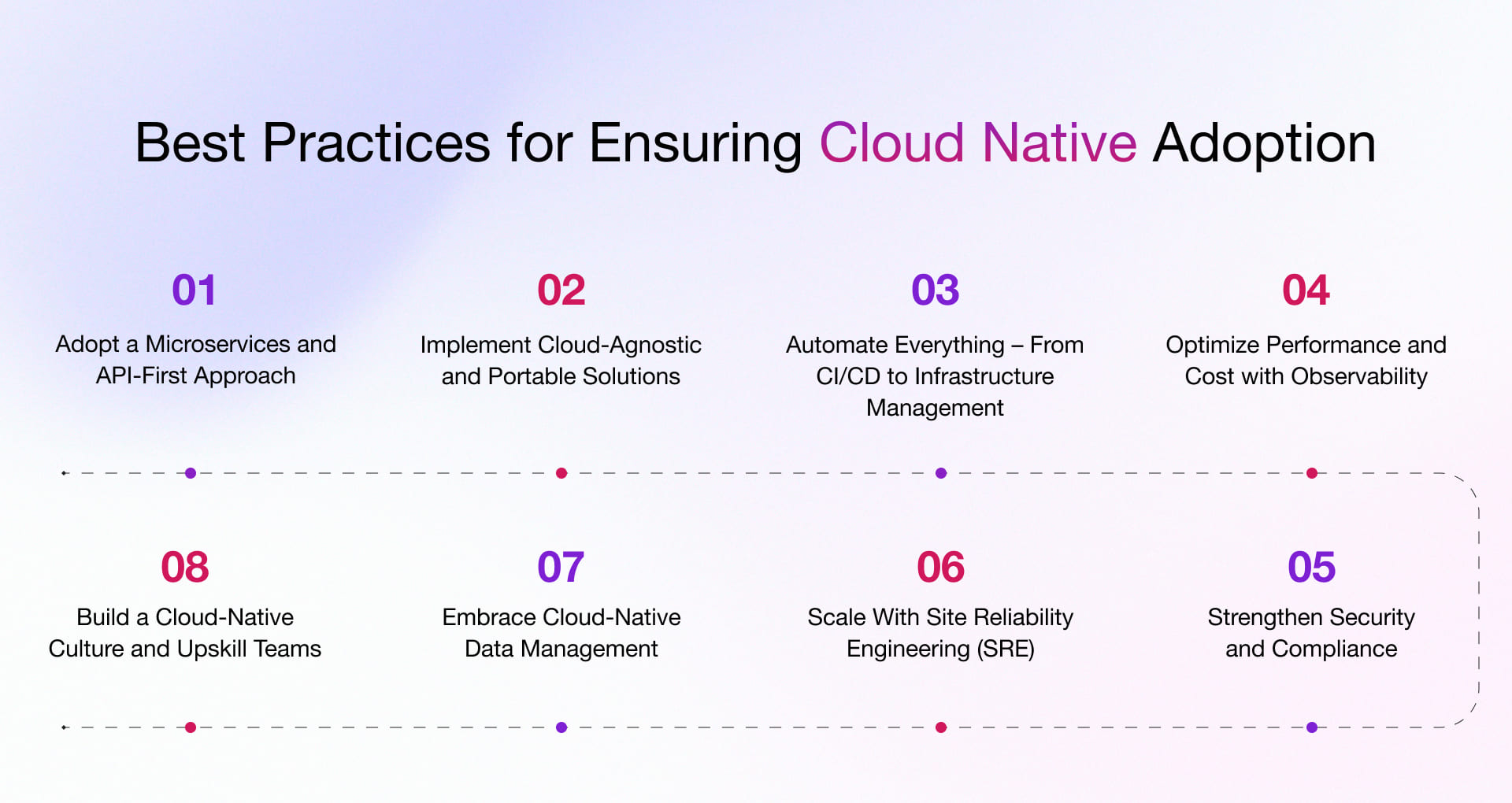

Best Practices for Cloud-Native Development

Moving to cloud-native is a game-changer, but scaling it successfully is where most enterprises struggle. What works for a pilot project may not be effective when implemented on an enterprise-wide scale. It might lead to cost overruns, performance bottlenecks, and security risks. Follow these cloud native development best practices to avoid these pitfalls.

1. Adopt a Microservices and API-First Approach

Modularity is the backbone of scale. Microservices allow you to independently develop, deploy, and scale components without taking the entire system down.

- Decouple Services – Build loosely coupled microservices that can be scaled independently rather than relying on monolithic applications.

- API-First Design – Use APIs as the primary mode of communication between services for seamless integration and extensibility.

- Event-Driven Architecture – Implement event-based communication patterns to facilitate real-time data flow and enhance responsiveness.

- Versioning and Documentation – Maintain explicit API versioning and documentation to prevent breaking changes during scaling.
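The decoupling and event-driven ideas above can be shown with a toy, in-process event bus. This is a sketch only: `Event`, `EventBus`, and the `order.placed` topic are hypothetical, and a real system would use a broker such as Kafka rather than direct function calls.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Event:
    topic: str
    version: int   # explicit schema version, so consumers can evolve safely
    payload: dict


class EventBus:
    """Minimal publish/subscribe hub; publishers never call consumers directly."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def subscribe(self, topic, handler):
        self._handlers[topic].append(handler)

    def publish(self, event):
        for handler in self._handlers.get(event.topic, []):
            handler(event)


bus = EventBus()
reserved = []


def reserve_stock(event):
    # Consumers ignore schema versions they do not understand.
    if event.version != 1:
        return
    reserved.append(event.payload["order_id"])


bus.subscribe("order.placed", reserve_stock)
bus.publish(Event("order.placed", 1, {"order_id": "A-100"}))
print(reserved)
```

The order service here knows nothing about inventory. Adding an email or analytics consumer later is one more `subscribe` call, with no change to the publisher.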

2. Implement Cloud-Agnostic and Portable Solutions

Avoid vendor lock-in and ensure applications can run seamlessly across multiple cloud providers.

- Use Kubernetes for Orchestration – Standardize deployment across cloud providers using Kubernetes for container orchestration.

- Abstract Cloud Dependencies – Utilize Infrastructure-as-Code (IaC) tools, such as Terraform or Pulumi, for enhanced portability.

- Leverage Multi-Cloud and Hybrid Cloud – Design applications that can operate across different cloud environments without major modifications.

Read more on how cloud-native differs from cloud-agnostic architecture—and when to use which.

3. Automate Everything – From CI/CD to Infrastructure Management

Automation reduces human errors, speeds up deployments, and ensures consistency in scaling operations.

- Continuous Integration & Continuous Deployment (CI/CD) – Automate code testing, integration, and deployment to accelerate releases.

Read our case study to discover how we helped a DOOH company optimize its DevOps implementation and CI/CD automation.

- Infrastructure as Code (IaC) – Define and manage cloud infrastructure using declarative tools like Terraform, AWS CloudFormation, or Azure Bicep.

- Policy-as-Code – Automate compliance enforcement by embedding security and governance policies in code.

- Self-Healing Infrastructure – Utilize auto-scaling and self-healing mechanisms to detect and automatically remediate failures.
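Policy-as-code is easier to picture with an example. The sketch below checks a resource definition against three rules before it is deployed. The resource schema (`type`, `public_access`, `encrypted`, `tags`) and the rules themselves are invented for illustration; dedicated engines such as Open Policy Agent express the same idea in their own policy languages.

```python
from typing import List


def check_policies(resource: dict) -> List[str]:
    """Return a list of policy violations for one resource definition."""
    violations = []
    if resource.get("type") == "storage_bucket":
        if resource.get("public_access", False):
            violations.append("storage buckets must not allow public access")
        if not resource.get("encrypted", False):
            violations.append("storage buckets must be encrypted at rest")
    # Ownership tags make cost allocation and incident routing possible.
    if not resource.get("tags", {}).get("owner"):
        violations.append("every resource needs an 'owner' tag")
    return violations


bucket = {"type": "storage_bucket", "public_access": True,
          "encrypted": False, "tags": {}}
for problem in check_policies(bucket):
    print("DENY:", problem)
```

Run in a CI/CD pipeline, a non-empty result would fail the build, so a non-compliant change is stopped before it reaches production rather than found in an audit later.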

4. Optimize Performance and Cost with Observability

Scaling effectively requires real-time monitoring and optimization to ensure applications run efficiently.

- Implement Observability (Logs, Metrics, Tracing) – Utilize tools such as Prometheus, Grafana, OpenTelemetry, and Datadog to gain deeper insights into system health.

- Dynamic Resource Scaling – Leverage auto-scaling policies to adjust resources dynamically based on demand.

- Cost Optimization Practices – Optimize cloud spending with tools such as AWS Cost Explorer or Azure Cost Management, and by adopting FinOps best practices.

- Load Balancing and Traffic Management – Use CDN, service mesh (e.g., Istio), and intelligent load balancing (e.g., AWS ALB) to distribute traffic efficiently.

5. Strengthen Security and Compliance from Day One

The broader your architecture, the bigger your attack surface. Build security in from the start, not as an afterthought.

- Zero Trust Security Model – Implement identity verification at every access point using IAM, MFA, and least privilege access.

- Shift-Left Security – Integrate security scanning early in the development lifecycle using tools like Snyk or Aqua Security.

- Runtime Protection – Utilize runtime security solutions such as Falco, Prisma Cloud, or AWS GuardDuty for real-time threat detection.

- Automated Compliance Checks – Regularly audit and enforce compliance with GDPR, HIPAA, and other industry regulations using policy-as-code tools.

6. Scale With Site Reliability Engineering (SRE)

Site reliability engineering helps balance speed and reliability by combining engineering with operations discipline.

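- Set SLOs, SLIs, and Error Budgets – Define Service Level Objectives (SLOs), track Service Level Indicators (SLIs) to measure system reliability, and use the error budget (the amount of unreliability the SLO permits) to decide when to slow down releases.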

- Automate Incident Response – Use tools like PagerDuty or OpsGenie to automate alerting and response for system failures.

- Chaos Engineering – Test system resilience by deliberately injecting failures using tools like Gremlin or AWS Fault Injection Simulator.

- Postmortem Analysis – Document outages and continuously improve systems through structured incident reviews.
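The arithmetic behind an error budget is simple enough to sketch. The function names and the request counts below are illustrative assumptions; the only fixed idea is that the budget is whatever the SLO leaves over, that is, 1 minus the target.

```python
def allowed_downtime_minutes(slo_target: float, days: int = 30) -> float:
    """Downtime the SLO permits over a window, in minutes."""
    return (1 - slo_target) * days * 24 * 60


def error_budget_report(slo_target: float, total_requests: int,
                        failed_requests: int) -> dict:
    """Compare observed failures against the failures the SLO allows."""
    if not 0 < slo_target < 1:
        raise ValueError("slo_target must be between 0 and 1 (exclusive)")
    if total_requests <= 0:
        raise ValueError("total_requests must be positive")
    allowed_failures = (1 - slo_target) * total_requests
    return {
        "sli": 1 - failed_requests / total_requests,    # measured reliability
        "allowed_failures": allowed_failures,            # the error budget
        "budget_consumed": failed_requests / allowed_failures,
        "budget_exhausted": failed_requests >= allowed_failures,
    }


# A 99.9% SLO leaves roughly 43 minutes of downtime per 30 days.
print(round(allowed_downtime_minutes(0.999), 1))
# 400 failures out of 1,000,000 requests uses about 40% of the budget.
print(error_budget_report(0.999, 1_000_000, 400))
```

Teams commonly tie release policy to `budget_consumed`: while budget remains, ship freely; once it is exhausted, prioritize reliability work until the window resets.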

7. Embrace Cloud-Native Data Management

Scalability extends beyond applications—it also applies to data storage, processing, and access.

- Use Distributed Databases – Opt for cloud-native databases like Amazon Aurora, Google Spanner, or CockroachDB for enhanced scalability.

- Data Mesh and Data Lakehouse Architecture – Implement modern data architectures that allow seamless data sharing across teams.

- Stream Processing for Real-Time Insights – Utilize Apache Kafka, AWS Kinesis, or Google Pub/Sub for real-time data processing.

- Backup and Disaster Recovery (DR) Plans – Ensure data durability and resilience by implementing automated backups and cross-region replication.

8. Build a Cloud-Native Culture and Upskill Teams

People and processes are as critical as technology in achieving cloud-native success at scale.

- Encourage a DevOps Mindset – Promote collaboration between development, operations, and security teams.

- Invest in Training & Certification – Upskill teams on Kubernetes, cloud platforms (AWS, Azure), and modern DevSecOps practices.

- Enable Internal Platforms for Developer Productivity – Build self-service internal platforms that streamline development and deployment workflows.

- Promote Experimentation and Innovation – Encourage a fail-fast approach to iterate on cloud-native solutions rapidly.

Actionable Steps for Scaling Cloud-Native Across the Enterprise

After aligning on cloud-native principles, the next step is translating them into execution. A successful cloud-native journey demands more than tech: it needs a coordinated plan across systems, teams, and timelines.

Here’s a proven, step-by-step roadmap to guide your enterprise from evaluation to optimization, while tracking ROI and managing risk at every stage.

Step 1 – Audit Your Current State

Start with a detailed inventory of your infrastructure, applications, and team capabilities. Classify workloads based on cloud suitability, whether they can be containerized directly, need refactoring, or must be rebuilt.

Measure ROI by:

- Calculating savings from retiring legacy infrastructure

- Establishing baselines for deployment frequency and lead time

- Tracking Mean Time to Recovery (MTTR) from past incidents

Watch for:

- The trap of lifting-and-shifting complex monoliths

- Gaps in Dev, Ops, or Security readiness

- Low-hanging fruit that can deliver early wins
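The baselines mentioned in this step can be computed from data most teams already have. Here is a minimal sketch, assuming incident records are available as (detected, resolved) timestamp pairs and deployments as a list of timestamps; the function names and sample dates are made up for illustration.

```python
from datetime import datetime, timedelta
from statistics import mean


def mttr_minutes(incidents):
    """Mean Time to Recovery from (detected_at, resolved_at) pairs."""
    durations = [(resolved - detected).total_seconds() / 60
                 for detected, resolved in incidents]
    return mean(durations)


def deployments_per_week(deploy_times, window_start, window_end):
    """Average deployments per week inside the [start, end) window."""
    in_window = [t for t in deploy_times if window_start <= t < window_end]
    weeks = (window_end - window_start) / timedelta(weeks=1)
    return len(in_window) / weeks


start = datetime(2024, 1, 1)
incidents = [
    (start, start + timedelta(minutes=30)),
    (start + timedelta(days=3), start + timedelta(days=3, minutes=90)),
]
deploys = [start + timedelta(days=d) for d in (0, 7, 14, 21)]

print(mttr_minutes(incidents))                                        # 60.0
print(deployments_per_week(deploys, start, start + timedelta(days=28)))  # 1.0
```

Capturing these numbers before migration matters more than the exact tooling: without a baseline, later improvements cannot be attributed to the cloud-native program.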

Step 2 – Plan a Phased Migration Strategy

Avoid the “big bang” approach. Prioritize applications that offer high business value but low complexity. Consider a hybrid strategy to reduce disruption and allow gradual transitions.

Measure ROI by:

- Tracking lead-time reduction for migrated workloads

- Measuring uptime and performance improvements

- Calculating efficiency gains through automation

Watch for:

- Misaligned prioritization of apps or services

- Absence of rollback plans during migration waves

- Feature rollout delays due to missing toggles or flags
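Feature toggles are what let a migrated service ship dark and roll out gradually. Below is a minimal sketch of a percentage-based flag; the flag name, the user IDs, and the hashing scheme are assumptions for illustration, and managed flag services offer the same idea with targeting rules and audit trails.

```python
import hashlib


def is_enabled(flag: str, user_id: str, rollout_percent: int) -> bool:
    """Deterministically place a user in or out of a percentage rollout."""
    if not 0 <= rollout_percent <= 100:
        raise ValueError("rollout_percent must be between 0 and 100")
    # Hashing flag + user gives each user a stable bucket from 0 to 99,
    # so the same user always gets the same answer for the same flag.
    digest = hashlib.sha256(f"{flag}:{user_id}".encode()).hexdigest()
    bucket = int(digest[:8], 16) % 100
    return bucket < rollout_percent


# Route a user to the migrated service only if the flag is on for them.
if is_enabled("new-checkout-service", "user-42", rollout_percent=10):
    print("serve from the migrated service")
else:
    print("serve from the legacy path")
```

Setting the percentage back to 0 acts as an instant rollback without a redeploy, which also addresses the missing rollback plans noted in the same list.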

Seamless cloud migration is the foundation of a successful cloud-native journey—explore our detailed blog on Cloud Migration Strategy to ensure a smooth and efficient transition.

Step 3 – Lay the DevOps and Automation Foundation

Build robust CI/CD pipelines, infrastructure as code (IaC), and automated testing to support agile, cloud-native delivery.

Measure ROI by:

- Monitoring deployment frequency and failure rate

- Tracking reduction in manual interventions

- Measuring provisioning speed and consistency

Watch for:

- Fragmented toolchains across teams

- Gaps in test automation or pipeline governance

- Poor auditability of code-to-cloud journeys

Step 4 – Embed Observability and Governance Early

Before scaling, implement centralized logging, tracing, metrics collection, and compliance checks. This improves system visibility while minimizing risk.

Measure ROI by:

- Reducing Mean Time to Detect (MTTD) and incident count

- Improving audit and compliance outcomes

- Monitoring severity and impact of failures

Watch for:

- Siloed observability tools or unclear ownership

- Missing service-level objectives (SLOs)

- Manual or fragmented compliance reporting

Step 5 – Optimize Continuously and Scale Confidently

Once cloud-native adoption is in motion, shift focus to performance, cost control, and cross-team efficiency. Formalize FinOps practices to sustain impact.

Measure ROI by:

- Tracking resource utilization and cost-to-performance ratios

- Measuring workload-level spending trends

- Analyzing app latency, availability, and throughput

Watch for:

- Static scaling policies that waste resources

- Fragmented cost visibility across departments

- Missed opportunities to adopt newer cloud-native tools

Build a Future Ready Cloud Native Ecosystem Without Overspending or Delays

Cloud-native transformation isn’t just a tech shift. It’s an organizational evolution. Many organizations struggle with the complexity of microservices, container orchestration, and infrastructure automation. Our team simplifies this transition with a well-defined roadmap that aligns with your business goals. To deliver cost-effective outcomes, our AWS and Azure experts leverage unique FinOps strategies, right-sizing, and automation to ensure you maximize ROI while keeping cloud expenses under control.

As a leading cloud application development company, we provide a future-ready cloud strategy to build cloud-native architectures that are flexible, multi-cloud compatible, and optimized for long-term scalability.

Frequently Asked Questions

Q – How do I ensure security in a cloud-native environment?

A – Here are some strategies that you can implement to secure your cloud-native environment:

- Follow Zero Trust Architecture for access control

- Integrate DevSecOps to embed security in the CI/CD pipeline

- Use container security tools like Aqua Security or Prisma Cloud

- Encrypt data at rest and in transit

Q – What are the biggest risks of moving to a cloud-native model, and how can they be mitigated?

A – Here are some risks of adopting a cloud-native model, along with ways to mitigate them.

Risks:

- Complexity (microservices management)

- Security gaps (expanded attack surface)

- Vendor lock-in (cloud provider dependence)

Mitigations:

- Use Kubernetes for portability

- Adopt zero-trust security

- Implement multi-cloud strategies

Q – Can cloud-native adoption help reduce IT costs? If so, how?

A – Yes. Cloud-native reduces costs through:

- Pay-as-you-go pricing – Only pay for resources used

- Auto-scaling – No over-provisioning during low traffic

- Lower maintenance – No hardware upkeep or data center costs

- Faster innovation – Shorter development cycles = quicker ROI
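To see why auto-scaling and pay-as-you-go pricing reduce cost, compare provisioning for peak load all day with scaling to hourly demand. The traffic profile and per-instance capacity below are invented for illustration, and the sketch ignores pricing tiers, scale-up lag, and reserved-capacity discounts.

```python
import math


def instance_hours(hourly_demand, capacity_per_instance, autoscale):
    """Instance-hours consumed over the period, with or without auto-scaling."""
    needed = [max(1, math.ceil(d / capacity_per_instance))
              for d in hourly_demand]
    if autoscale:
        return sum(needed)             # pay only for what each hour requires
    return max(needed) * len(needed)   # provision for the peak, all day long


# Hypothetical day: 20 quiet hours at 100 req/s, 4 peak hours at 1,000 req/s.
demand = [100] * 20 + [1000] * 4
fixed = instance_hours(demand, capacity_per_instance=100, autoscale=False)
scaled = instance_hours(demand, capacity_per_instance=100, autoscale=True)

print(fixed, scaled)                          # 240 60
print(f"savings: {1 - scaled / fixed:.0%}")   # savings: 75%
```

The spikier the traffic, the larger the gap; a workload with flat demand would see little benefit from auto-scaling alone.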

Q – What role does Kubernetes play in cloud-native architecture?

A – Kubernetes is the de facto standard for container orchestration, providing:

- Automated deployment & scaling of applications

- Self-healing capabilities to maintain application availability

- Load balancing for optimized resource usage

- Multi-cloud portability for flexible deployment options

Q – What tools and technologies are essential for a cloud-native environment?

A – Here are some prominent tools and technologies that you need to be cloud-ready:

- Containerization: Docker, Podman

- Orchestration: Kubernetes, OpenShift

- CI/CD: Jenkins, GitHub Actions, ArgoCD

- Monitoring & Observability: Prometheus, Grafana, New Relic

- Security: Aqua Security, Prisma Cloud