Data pipeline automation streamlines data movement through self-operating workflows and systems. It transforms manual pipeline management into an automated framework. In this framework, data flows seamlessly between sources and destinations, such as data lakes or warehouses, incorporating necessary transformations and validations along the way.

This automation has become crucial as Business Intelligence, Machine Learning, and analytics demand the processing of massive datasets. With increasingly diverse data sources, manually managed ETL processes are often no longer sufficient on their own.

Whether you are a CMO, CTO, or product manager, this blog will demystify the concept of data pipeline automation. We explore key benefits, use cases, best practices, common challenges, and solutions to overcome them. So, let’s get started!

Classification of Automated Data Pipelines

Automated data pipelines manage the flow of data from various sources to destinations, transforming it along the way. They can be classified based on several criteria, including processing methods, deployment architecture, and transformation approaches. Here’s a detailed overview of these classifications.

Processing Methods

These pipelines can be categorized into two primary processing methods:

- Batch Processing: This method involves collecting and processing data in large batches at scheduled intervals. It is ideal for historical data analysis and regular reporting, where immediate insights are not critical. Batch pipelines are often used in scenarios like daily or weekly reporting from a CRM system to a data warehouse.

- Real-Time (Streaming) Processing: In contrast, these pipelines process data as it arrives, providing immediate insights and enabling applications that require up-to-the-minute information, such as financial transactions or monitoring systems. This type of pipeline is crucial for environments that demand quick decision-making.
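To make the difference between the two processing methods concrete, here is a minimal sketch in Python. The event data and the names `batch_total` and `StreamingTotal` are hypothetical and chosen only for illustration. A real batch job would typically be triggered by a scheduler, and a real streaming job would read from a message broker.

```python
# Hypothetical payment events; the field names are illustrative only.
EVENTS = [{"amount": 40}, {"amount": 25}, {"amount": 35}]


def batch_total(events):
    """Batch style: wait for the full dataset, then process it in one pass."""
    return sum(event["amount"] for event in events)


class StreamingTotal:
    """Streaming style: update the result as each event arrives."""

    def __init__(self):
        self.total = 0

    def on_event(self, event):
        self.total += event["amount"]
        return self.total  # an up-to-date answer after every event


stream = StreamingTotal()
running = [stream.on_event(event) for event in EVENTS]
```

Both styles arrive at the same final total. The streaming version simply makes an intermediate answer available after every event instead of only at the end of the run.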

Deployment Architecture

Automated data pipelines also differ in where they are deployed:

- On-Premises Pipelines: These run on local servers and give organizations complete control over their data and security. They are suitable for businesses with stringent compliance requirements or those that prefer to manage their infrastructure.

- Cloud-Based Pipelines: These utilize cloud services for flexibility and scalability. They reduce maintenance overhead and allow organizations to scale resources as needed without significant upfront investment. Cloud-based solutions are often preferred for their ease of integration with other cloud services.

- Hybrid Pipelines: Combining both on-premises and cloud resources, hybrid pipelines offer the benefits of both architectures, allowing organizations to optimize costs while maintaining control over sensitive data.

Transformation Approaches

The way data is transformed within the pipeline also plays a critical role in its classification:

- ETL (Extract, Transform, Load): This is the traditional approach, in which data is extracted from different sources, transformed into a suitable format, and then loaded into a destination system (like a data warehouse). ETL is commonly used for structured data processing where transformations need to occur before loading.

- ELT (Extract, Load, Transform): This modern approach loads raw data into the destination first and performs transformations afterward. ELT is particularly effective for big data analytics because it allows for faster access to raw data and leverages the processing power of modern databases.
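The ordering difference between ETL and ELT can be sketched with Python's built-in `sqlite3` module standing in for a warehouse. This is an illustration under stated assumptions, not a production design: the table names, the sample rows, and the functions `run_etl` and `run_elt` are all hypothetical.

```python
import sqlite3

# Hypothetical raw records from a source system (illustrative data only).
RAW_ORDERS = [
    ("1001", " alice ", "19.50"),
    ("1002", "BOB", "5.00"),
]


def run_etl(conn, rows):
    """ETL: transform in application code, then load the cleaned rows."""
    cleaned = [
        (int(order_id), customer.strip().lower(), float(amount))
        for order_id, customer, amount in rows
    ]
    conn.execute(
        "CREATE TABLE orders_etl (order_id INTEGER, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders_etl VALUES (?, ?, ?)", cleaned)


def run_elt(conn, rows):
    """ELT: load raw strings first, then transform inside the database."""
    conn.execute(
        "CREATE TABLE orders_raw (order_id TEXT, customer TEXT, amount TEXT)"
    )
    conn.executemany("INSERT INTO orders_raw VALUES (?, ?, ?)", rows)
    conn.execute(
        """
        CREATE TABLE orders_elt AS
        SELECT CAST(order_id AS INTEGER) AS order_id,
               LOWER(TRIM(customer))     AS customer,
               CAST(amount AS REAL)      AS amount
        FROM orders_raw
        """
    )


conn = sqlite3.connect(":memory:")
run_etl(conn, RAW_ORDERS)
run_elt(conn, RAW_ORDERS)
etl_rows = conn.execute("SELECT * FROM orders_etl ORDER BY order_id").fetchall()
elt_rows = conn.execute("SELECT * FROM orders_elt ORDER BY order_id").fetchall()
```

In the ELT path the untouched `orders_raw` table stays available, so the transformation can be revised and re-run later without going back to the source system.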

Additional Classification

Other factors that can influence the classification of data pipeline automation include:

- Micro-batch Processing: A hybrid between batch and real-time processing, micro-batch pipelines process small batches of data at very short intervals. This method strikes a balance between the two by providing near-real-time insights while still grouping data for efficiency.

- Data Quality Features: Modern automated pipelines often include features for monitoring data quality, anomaly detection, error handling, and pipeline traceability to ensure reliable operations across complex workflows.
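As a rough illustration of micro-batching, the sketch below groups an incoming stream into small fixed-size batches. The helper name `micro_batches` is hypothetical, and grouping by count is a simplification: streaming engines commonly cut micro-batches by time window instead.

```python
from itertools import islice


def micro_batches(events, batch_size):
    """Yield lists of up to batch_size events from any iterable."""
    iterator = iter(events)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return  # the stream is exhausted
        yield batch


# Seven events arrive; they are processed three at a time.
batches = list(micro_batches(range(7), batch_size=3))
```

Each yielded list can be handed to the same processing code a batch job would use, which is what keeps this approach efficient while still delivering results frequently.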

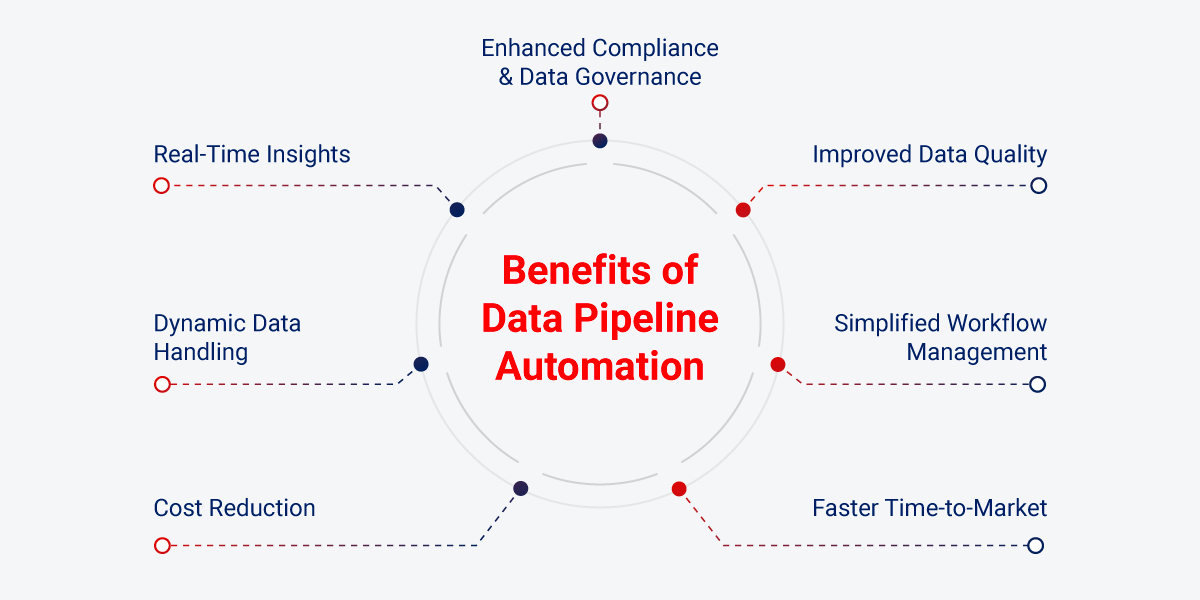

Data Pipeline Automation Benefits

Automating data pipelines offers numerous benefits, whether it be streamlined workflow, dependency management, data governance, or better visibility. Let’s dive deep into some crucial ones.

Enhanced Compliance and Data Governance

Automation enables checks and controls that safeguard data quality and integrity. These checks provide a clear audit trail and the lineage of data movements and transformations. This transparency helps your organization meet compliance requirements and leads to more reliable data for decision-making.

Real-Time Insights

A well-designed automated pipeline enables near real-time data processing and analysis. This capability is crucial for timely decision-making in fast-paced environments such as financial trading systems or IoT applications, where immediate responses can provide a competitive advantage.

Dynamic Data Handling

Automated pipelines can efficiently manage dynamic data changes, such as schema updates or data format changes. This flexibility allows organizations to adapt quickly to evolving business needs without significant disruptions to data processing workflows.
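One simple way a pipeline can tolerate schema changes is to conform every incoming record to an expected schema, filling in defaults for missing fields and setting unknown fields aside for review. The sketch below assumes a hypothetical `EXPECTED_SCHEMA` and helper `conform`. Real platforms often rely on a schema registry or the warehouse's own schema evolution features instead.

```python
# Hypothetical target schema: field name -> default used when the field is absent.
EXPECTED_SCHEMA = {"id": None, "email": "", "plan": "free"}


def conform(record, schema=EXPECTED_SCHEMA):
    """Return (conformed_record, unexpected_fields) for one incoming record."""
    conformed = {field: record.get(field, default) for field, default in schema.items()}
    extras = {key: value for key, value in record.items() if key not in schema}
    return conformed, extras


# The source added a new "signup_source" column and omitted "plan".
row, unknown = conform({"id": 1, "email": "a@example.com", "signup_source": "ad"})
```

Downstream steps keep receiving a stable shape, while the `unknown` fields can be logged so the team can decide whether to add them to the schema.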

Cost Reduction

Data pipeline automation can reduce operational costs by consolidating various point solutions into a single end-to-end platform. Organizations lower their annual software spend when they no longer pay for, integrate, and maintain multiple overlapping tools.

Improved Data Quality

Automating data pipelines enforces standardization in processing through consistent transformation rules and cleaning procedures. Built-in quality checks run automatically at each pipeline stage, enabling systematic validation and early error detection. Through pre-defined rules, automation ensures data undergoes consistent cleaning, validation, and transformation before reaching its destination, reducing human error and leading to more accurate and reliable datasets for analysis.
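The pre-defined rules described above can be expressed as small named checks that run on every record. The following is a minimal sketch. The rule names, the record fields, and the helpers `validate` and `split_valid` are assumptions made for illustration.

```python
# Hypothetical rules: rule name -> function returning True when the record passes.
RULES = {
    "order_id_present": lambda r: r.get("order_id") is not None,
    "amount_non_negative": lambda r: isinstance(r.get("amount"), (int, float))
    and r["amount"] >= 0,
}


def validate(record, rules=RULES):
    """Return the names of all rules the record fails."""
    return [name for name, check in rules.items() if not check(record)]


def split_valid(records, rules=RULES):
    """Separate clean records from rejected ones, keeping the failure reasons."""
    valid, rejected = [], []
    for record in records:
        failures = validate(record, rules)
        if failures:
            rejected.append((record, failures))
        else:
            valid.append(record)
    return valid, rejected


valid, rejected = split_valid(
    [{"order_id": 1, "amount": 10.0}, {"order_id": None, "amount": -5}]
)
```

Keeping the failure reasons next to each rejected record makes it easier to trace a quality issue back to its source rather than silently dropping data.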

Simplified Workflow Management

Automation streamlines complex workflows by managing scheduling and dependencies between tasks more effectively. This simplification allows for better coordination of data-related activities across the organization.
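Dependency management boils down to running each task only after the tasks it depends on have finished. As a small sketch, Python's standard-library `graphlib` module (Python 3.9+) can compute a valid execution order. The task names below are hypothetical, and a workflow orchestrator would add scheduling, retries, and parallelism on top of this idea.

```python
from graphlib import TopologicalSorter

# Hypothetical workflow: each task maps to the set of tasks it depends on.
DEPENDENCIES = {
    "extract_crm": set(),
    "extract_web": set(),
    "transform": {"extract_crm", "extract_web"},
    "load": {"transform"},
}


def execution_order(dependencies):
    """Return the tasks in an order that respects every dependency."""
    return list(TopologicalSorter(dependencies).static_order())


order = execution_order(DEPENDENCIES)
```

The two extract tasks may appear in either order because neither depends on the other, but `transform` always follows both of them and `load` always comes last.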

Faster Time-to-Market

Data pipeline automation accelerates product development by providing immediate access to processed data and enabling rapid iterations. Through automated testing, reusable components, and streamlined deployment, teams can quickly validate features and launch products with data-driven confidence, gaining a competitive advantage in the market.

Data Pipeline Automation Use Cases

Automating data pipelines saves you time, reduces errors, and boosts productivity. Let us look at the difference it makes through the following use cases.

Enhanced Business Intelligence Reporting

Automated pipelines streamline data extraction, transformation, and loading (ETL) into business intelligence tools. Organizations can ensure timely updates to their dashboards and reports by automating these processes. This provides accurate insights to the stakeholders for decision-making without the delays associated with manual data handling. It offers improved operational efficiency and faster response times to market changes.

IoT Data Processing

In IoT applications, automated pipelines manage vast amounts of sensor data in real time. Key use cases include:

- Smart Cities: Processing data from traffic, air quality, and energy consumption sensors to optimize urban operations and resource allocation.

- Agricultural Monitoring: Analyzing soil moisture and weather sensor data to enable data-driven decisions for crop management and irrigation control.

Comprehensive Customer Insights

Automated data pipelines consolidate and process customer data from diverse sources like CRM systems, website analytics, social media interactions, and e-commerce transactions. This real-time data integration enables:

- Customer Analytics: Creating unified customer profiles by combining transaction history, browsing behavior, and demographic data to drive personalized marketing campaigns.

- Behavioral Analysis: Processing event streams and interaction data to identify customer patterns, predict churn, and optimize customer journey touchpoints.

Data Transformation for Machine Learning Pipelines

Automated pipelines transform raw data into the format that machine learning models require. Typical tasks include:

- Cleaning, feature engineering, and normalizing raw inputs (e.g., images, text, time-series data) for use in ML models.

- Transforming structured data into feature sets for training supervised learning models.

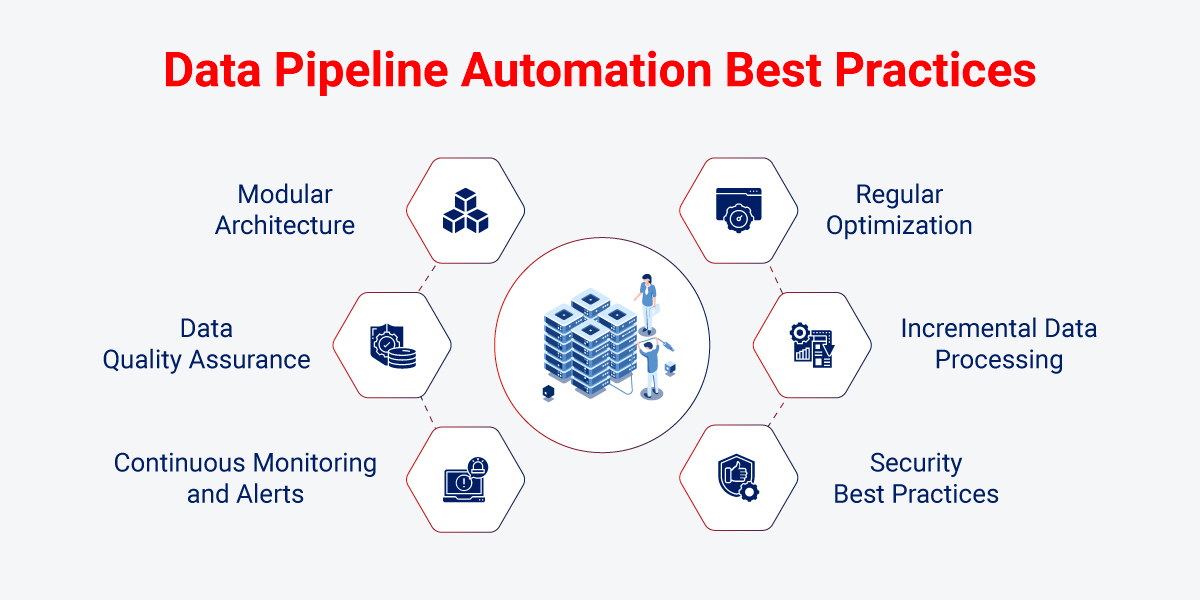

Best Practices for Data Pipeline Automation

Automating data pipelines can seem complex and challenging since it involves multiple interconnected systems and tools. Here are some best practices to ensure your data pipeline automation is robust, efficient, and easy to maintain.

Modular Architecture

Design your pipeline using a modular approach, breaking it into smaller, manageable components (e.g., extraction, transformation, loading). This structure allows for easier updates and maintenance without disrupting the entire system.
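Here is a minimal sketch of what a modular pipeline can look like in Python, assuming each stage is a plain function with a narrow contract. The function names, the sample rows, and the in-memory `warehouse` list are hypothetical stand-ins for real sources and destinations.

```python
def extract():
    """Hypothetical source: raw rows as they might arrive from a CRM export."""
    return [{"name": " Ada ", "spend": "120.5"}, {"name": "Lin", "spend": "80"}]


def transform(rows):
    """Trim names and convert spend from text to a number."""
    return [{"name": row["name"].strip(), "spend": float(row["spend"])} for row in rows]


def load(rows, sink):
    """Append rows to the destination and report how many were written."""
    sink.extend(rows)
    return len(rows)


def run_pipeline(sink, extract_fn=extract, transform_fn=transform, load_fn=load):
    """Wire the stages together; any stage can be swapped without touching the others."""
    return load_fn(transform_fn(extract_fn()), sink)


warehouse = []  # stand-in for a real destination
loaded = run_pipeline(warehouse)
```

Because each stage is passed in as a parameter, a team can replace or test one component (for example, a new `transform_fn`) in isolation while the rest of the pipeline stays unchanged.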

Data Quality Assurance

Define data quality metrics and monitor them consistently so that issues are corrected before they become significant. Validate data at every stage, and run data profiling and audits regularly to keep quality high.

Continuous Monitoring and Alerts

Monitoring pipeline performance is essential to the success of your data pipeline automation. Implement a robust monitoring and alerting system that tracks data flow, processing times, and anomalies.
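A basic version of this idea is to wrap each stage so that its duration is measured and an alert fires on failure or slowness. The sketch below is an assumption-laden illustration: `run_with_monitoring` is a hypothetical helper, and `alert` is any callable you supply, such as a function that posts to your chat or paging tool.

```python
import time


def run_with_monitoring(name, stage, max_seconds, alert):
    """Run a stage, alerting if it raises or exceeds its time budget."""
    start = time.perf_counter()
    try:
        result = stage()
    except Exception as exc:
        alert(f"{name} failed: {exc}")
        raise  # still surface the error to the caller
    elapsed = time.perf_counter() - start
    if elapsed > max_seconds:
        alert(f"{name} took {elapsed:.2f}s (limit {max_seconds}s)")
    return result


alerts = []  # in this sketch, alerts are simply collected in a list
row_count = run_with_monitoring("load_orders", lambda: 42, max_seconds=60, alert=alerts.append)
```

Re-raising the exception after alerting keeps the failure visible to whatever is orchestrating the pipeline, so a broken stage does not let downstream steps run on incomplete data.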

Regular Optimization

Continuously assess the performance of your automated pipeline and optimize based on metrics and feedback. Look for bottlenecks or inefficiencies that can be improved through adjustments in architecture or technology choices.

Incremental Data Processing

Implement incremental processing techniques to handle only the new or changed data since the last run, rather than reprocessing entire datasets. This approach reduces processing time and resource consumption, making your pipeline more efficient.
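A common way to implement this is a "high-water mark": the pipeline remembers the latest change timestamp it has processed and asks only for newer rows on the next run. The sketch below assumes each row carries an ISO-8601 `updated_at` string, which sorts correctly as text. The field name, sample rows, and `incremental_load` helper are hypothetical.

```python
# Hypothetical source table with a last-modified timestamp on every row.
SOURCE_ROWS = [
    {"id": 1, "updated_at": "2024-01-01T09:00:00"},
    {"id": 2, "updated_at": "2024-01-02T09:00:00"},
    {"id": 3, "updated_at": "2024-01-03T09:00:00"},
]


def incremental_load(rows, last_watermark):
    """Return rows changed after last_watermark, plus the new watermark."""
    new_rows = [row for row in rows if row["updated_at"] > last_watermark]
    new_watermark = max(
        (row["updated_at"] for row in new_rows), default=last_watermark
    )
    return new_rows, new_watermark


# First run picks up rows 2 and 3; an immediate second run finds nothing new.
first_rows, watermark = incremental_load(SOURCE_ROWS, "2024-01-01T12:00:00")
second_rows, watermark_after = incremental_load(SOURCE_ROWS, watermark)
```

In practice the watermark would be persisted between runs, and the filter would be pushed into the source query so that unchanged rows are never read at all.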

Security Best Practices

Ensure that security measures are integrated into your pipeline automation processes. Encrypt data both in transit and at rest, and enforce access controls to protect sensitive information from unauthorized access.

Data Pipelines Challenges and How We Help Overcome Them

As businesses pursue their digital transformation journeys, data remains at the core of innovation, from business strategy & customer experience to marketing, sales & support. Make no mistake: your ability to leverage this ever-growing volume & variety of data will determine the future success of your business.

But here’s the bottleneck: Managing data pipelines efficiently is not easy!

- Business data is generated by multiple sources and stored in silos.

- Reports need to be generated from different data sources.

- Most enterprises also struggle with synchronization problems, which in turn hamper data consistency.

- With AI gaining traction globally, data sourcing and preparation are becoming pain points in the absence of automation.

- Running big data workloads in isolation is keeping companies from integrating data-driven ecosystems into their agile & DevOps initiatives.

- Conventional workload automation fails to accommodate the needs of big data workloads & cloud-native infrastructure.

- Missing a single step in data processing, or executing it at the wrong time, can result in wasted time and bad data.

- While big data enables fast & informed decision-making, integrating big data technologies can cause major operational disruptions and delay the delivery of value.

- Traditional data tools often fail to distribute massive volumes of data to downstream apps in real-time, resulting in slow response times and lost business opportunities.

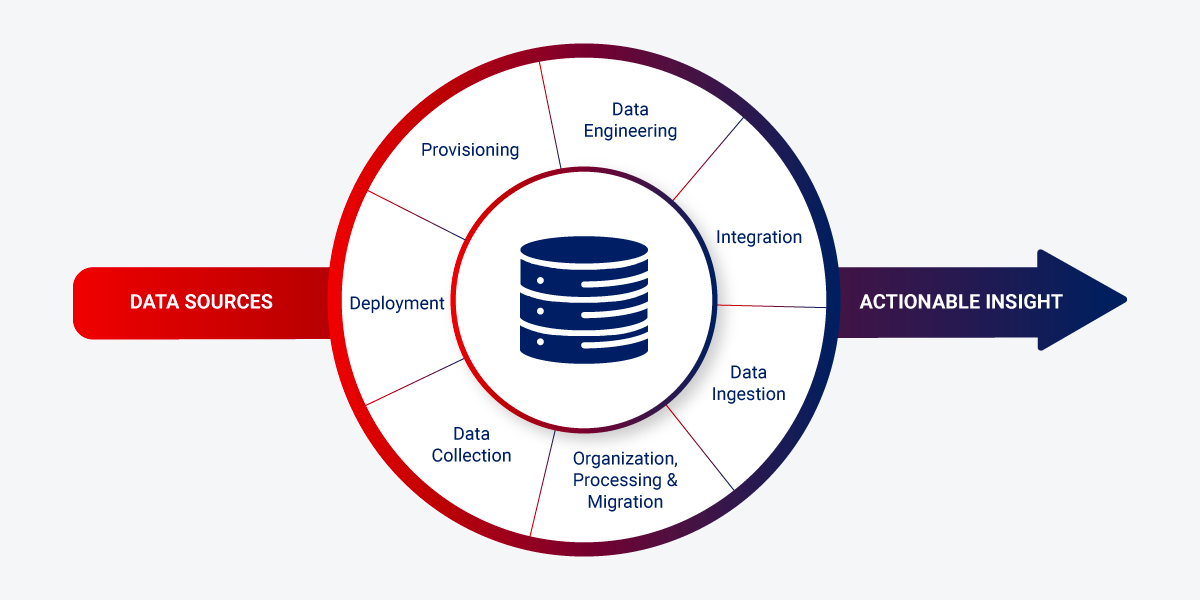

Fortunately, the solution to overcome all these challenges lies in “Data Pipeline Automation”.

It helps you turn massive volumes of raw data into actionable insights more quickly. Here’s how:

- Provision computing resources & a sandbox for running analytics

- Deploy code & ML models for advanced analytics

- Collect data from several apps & endpoints in real time

- Organize, process & move data securely

- Ingest data into different big data databases

- Integrate third-party schedulers and open-source tools

- And, finally, provide self-service tools that make data actionable for data engineers

Rishabh Software’s Data Pipeline Automation Services Expertise

We have extensive experience in offering end-to-end data automation for organizations across varied sectors. We help you convert your costly & siloed infrastructure into robust big data pipelines for agile business analytics, machine learning & AI.

Our expertise spans:

- Architecture Design: Our specialists assess the project you’re planning or help you review an existing deployment. By applying industry best practices and assessing design trade-offs, we ensure that your team’s projects are well designed and built.

- Integration with existing data sources & services: Leverage our specialists at every stage of the process, from data collection & processing through ETL, data cleaning & structuring, to data visualization and building predictive models on top of the data.

- Implementing Cloud Data Warehousing & ETL: The Rishabh team can help implement modern data architectures with a cloud data warehouse or data lake. By adhering to industry best practices during pipeline development, we help reduce the time your teams spend on data quality processes.

Do explore our data engineering services and solutions to learn how we can help design, build & operationalize your modern data projects to accelerate time-to-value and reduce cost-of-quality.

Our Data Pipeline Automation Tool Stack

Amazon Web Services (AWS)

- Amazon EMR

- Amazon Athena

- Amazon Kinesis Analytics

- AWS Data Pipeline

- Amazon Redshift

- Amazon Aurora

- Amazon DynamoDB

- Amazon RDS

- Amazon Elasticsearch Service

Microsoft Azure

- Azure Data Factory

- Azure Cosmos DB

- Azure Data Lake

- Azure Stream Analytics

- Azure Redis Cache

- Azure SQL Data Warehouse

- Azure SQL DB

Open Source

- Hadoop

- Apache Kafka, Apache Drill

- Presto

- Spark, Spark SQL, Spark Streaming, Hive

- Cassandra, MongoDB, HBase, Phoenix, Couchbase

- Oozie, Airflow

Visualization tools

Conclusion

Data is the cornerstone of decision-making for your organization. Your ability to manage, monitor and manipulate it efficiently has a direct positive impact on your CX, sales, compliance & business success. Sharing comprehensive and accurate data analytics across the enterprise promptly gives you an edge over your competitors and increases your revenue. So, if your data is still being managed by data engineers working in silos with different approaches, then your development environment won’t be able to keep up with the ever-increasing demands of a data-driven business landscape.

Fortunately, automation of the data pipeline changes all that! Team up with us to orchestrate your infrastructure, tools and data while automating processes end-to-end!

Frequently Asked Questions

Q – How Does Data Pipeline Automation Work?

A – Data Pipeline Automation involves several key steps:

- Data Ingestion: This is the initial stage of gathering data from various sources such as databases, APIs, and applications.

- Data Processing: In this phase, the ingested data is cleaned, transformed, and enriched to ensure it is in a usable format.

- Data Storage: Processed data is stored in databases or warehouses for future access and analysis.

- Data Analysis: The data is analyzed to generate insights that support decision-making, often using machine learning and predictive analytics.

- Data Visualization: Finally, the results are presented through dashboards or reports for easy interpretation.

Automation enhances efficiency by reducing manual tasks, improving data quality and allowing for real-time processing and monitoring.
