In today’s data-driven world, where real-time data pipelines and processing systems fuel innovation and drive decision-making, the role of data engineering has never been more pivotal. Data engineering combines a range of tools and methods to build a robust foundation for delivering insights consistently at scale and for overcoming the big data challenges companies face today.

As we head into 2026, several key data engineering trends are reshaping how we build, manage, and use data infrastructure. These developments are changing how we handle information, paving the way for more streamlined processes, better decision-making, and more intelligent, responsive systems.

Importance of Data Engineering and Market Overview

Due to the increasing diversity and exponential growth of data, data engineering is now indispensable for automating and orchestrating data pipelines and ETL (Extract, Transform, Load) processes. Data engineers design, build, and maintain these pipelines, ensuring that data is meticulously collected, cleansed, transformed, and made available across the organization in a structured and reliable manner. In doing so, data engineering gives enterprises seamless access to their data so they can get value out of it faster and at scale.

Whether the goal is improving efficiency, managing a growing influx of data, or bridging the gap between raw information and informed decision-making, data engineering gives your business the foundation it needs to make better decisions.

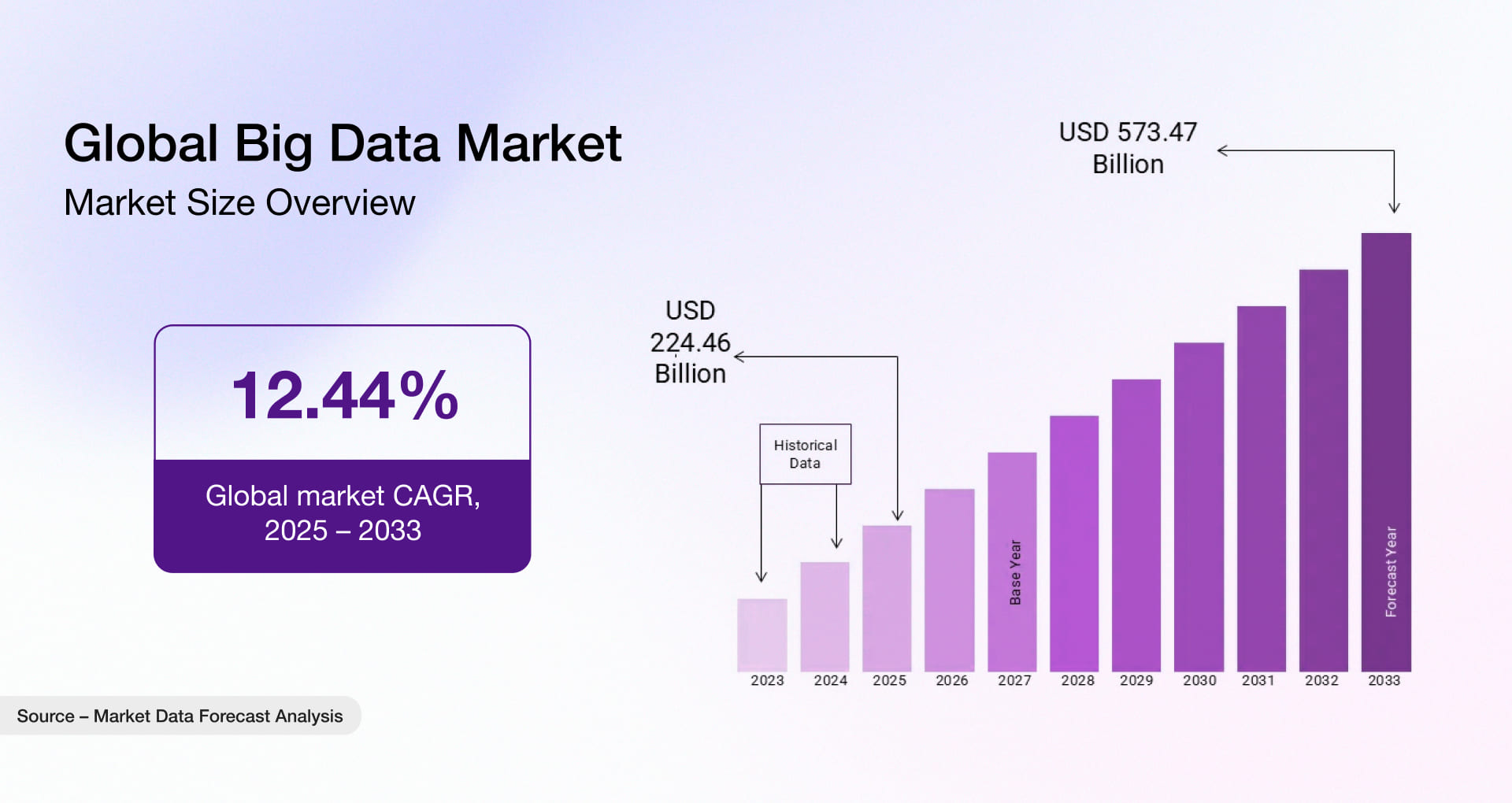

The global big data and data engineering market is projected to grow to USD 325.01 billion by 2033 at a compound annual growth rate (CAGR) of 17.6% [1].

These figures point to strong growth in data engineering over the coming years. As companies pursue digital transformation and evolve within the digital ecosystem, high-quality data engineering plays a crucial role in the success of your business operations.

Top Data Engineering Trends 2026

The latest data engineering trends for 2026 underline the growing importance of scalable, agile, and innovative data management strategies. Understanding and embracing them will be crucial for organizations that want to stay competitive in an increasingly data-centric business landscape, as these trends shape the future of data engineering and its applications across industries.

Cloud-Native Data Engineering

Cloud-native data engineering is emerging as a preferred approach because of the scalability, agility, and cost-effectiveness it offers. Leading cloud platforms such as AWS, Azure, and Google Cloud provide scalable, budget-friendly infrastructure for storing and processing data.

2026 will likely see a rise in migration towards cloud-based data storage, processing, and analysis solutions. This shift empowers businesses to leverage advanced computing power, enabling faster data processing and accessibility while reducing infrastructure complexities.

Data Warehouse and Data Lake – Data Vault & Data Hub

The convergence of data warehouses and data lakes is fast gaining momentum, providing a unified platform for storing and analyzing structured and unstructured data. This integration simplifies data management, allowing for seamless data exploration and insights generation. Organizations are expected to invest more in cohesive data architectures that combine the strengths of both data warehousing and data lakes.

These trends will accelerate further in 2026 with the adoption of Data Vault 2.0 and the Data Hub model.

- Aimed at reshaping the data warehouse, Data Vault provides a structured yet adaptable modeling framework (built from hubs, links, and satellites) that makes historical data easier to track and enables seamless integration across systems.

- The Data Hub, for its part, streamlines access to enterprise data and enables governed sharing, a role that will be hard to overlook in the future.
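To make the Data Vault idea more concrete, here is a minimal Python sketch of one hub and one satellite. It assumes the common Data Vault 2.0 convention of hashing normalized business keys (MD5 here) and storing a "hash diff" of descriptive attributes, so a new satellite row is written only when those attributes actually change. The table and column names (`hub_customer`, `sat_customer`, `customer_id`) are hypothetical, and in-memory Python structures stand in for warehouse tables.

```python
import hashlib
from datetime import datetime, timezone


def hash_key(*parts: str) -> str:
    """Data Vault-style hash: trim, upper-case, join with a delimiter, then MD5."""
    normalized = "||".join(p.strip().upper() for p in parts)
    return hashlib.md5(normalized.encode("utf-8")).hexdigest()


# In-memory stand-ins for warehouse tables (hypothetical names).
hub_customer: dict[str, dict] = {}
sat_customer: list[dict] = []


def load_customer(customer_id: str, attributes: dict, source: str) -> None:
    hk = hash_key(customer_id)
    load_ts = datetime.now(timezone.utc)

    # Hub: one row per business key, inserted only the first time the key is seen.
    if hk not in hub_customer:
        hub_customer[hk] = {"hk": hk, "customer_id": customer_id,
                            "load_ts": load_ts, "source": source}

    # Satellite: append a new version only when the descriptive attributes change,
    # which preserves the full history instead of overwriting it.
    hash_diff = hash_key(*(f"{k}={attributes[k]}" for k in sorted(attributes)))
    history = [row for row in sat_customer if row["hk"] == hk]
    if not history or history[-1]["hash_diff"] != hash_diff:
        sat_customer.append({"hk": hk, "hash_diff": hash_diff,
                             "load_ts": load_ts, "source": source, **attributes})


load_customer("C-001", {"name": "Ada", "city": "London"}, "crm")
load_customer("C-001", {"name": "Ada", "city": "London"}, "crm")  # unchanged: no new row
load_customer("C-001", {"name": "Ada", "city": "Paris"}, "crm")   # changed: new version
print(len(hub_customer), len(sat_customer))  # 1 2
```

Because history is only ever appended, "what did we know about this customer last month?" becomes a simple filter on `load_ts` rather than a reconstruction from overwritten rows.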

Data Mesh

Data mesh, with its focus on agility, scalability, and a distributed, decentralized data architecture, is set to influence data engineering practices in 2026. By promoting domain-oriented, self-serve data platforms, data mesh enables efficient data access while maintaining data quality and governance. It decentralizes data ownership and facilitates cross-functional collaboration within organizations, while also making the system more resilient to failures as data volumes grow.

Data Engineering as a Service

Data Engineering as a Service (DEaaS) is a rising trend in data engineering for 2026. It’s like having a team of data experts available without having to hire and manage them in-house. Instead of building and maintaining your own data pipelines, data lakes, and complex data infrastructure, you rent access to a managed data engineering platform. DEaaS providers take care of everything from data ingestion and transformation to deployment and monitoring, freeing you to focus on what matters most: extracting insights from your data. DEaaS especially benefits companies that lack the internal capabilities to build and oversee their own data pipelines.

DEaaS is gaining popularity because handling data keeps getting more complex as the number of data sources and types grows. Finding skilled people to manage all this data is also difficult. DEaaS helps by providing access to experienced data engineers and data scientists without the hassle of hiring them.

Edge Computing

Edge computing is an increasingly prominent data engineering trend for 2026 and beyond. It involves processing data close to where it’s created rather than sending all of it to a centralized location, which reduces latency and improves efficiency when handling large data volumes. With edge computing, devices such as smartphones, sensors, and other smart devices can perform processing tasks locally, making real-time analysis and decision-making faster. As the volume of generated data continues to soar, edge computing stands out as a vital trend in data engineering, offering practical ways to handle data more effectively at the source.
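A minimal sketch of the edge pattern, assuming a device that buffers raw sensor readings locally and forwards only a compact summary plus any out-of-range values. The thresholds, field names and the `summarize_window` helper are hypothetical; a real deployment would send the resulting payload upstream over a network protocol rather than just printing it.

```python
from statistics import mean


def summarize_window(readings: list[float], low: float, high: float) -> dict:
    """Reduce a window of raw readings to a small payload for the central platform."""
    anomalies = [r for r in readings if r < low or r > high]
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(mean(readings), 2),
        "anomalies": anomalies,  # only unusual values travel upstream in full
    }


# One small payload replaces eight raw readings, saving bandwidth and round trips.
window = [21.0, 21.2, 20.9, 35.5, 21.1, 21.0, 20.8, 21.3]
payload = summarize_window(window, low=15.0, high=30.0)
print(payload)
```

The design choice here is to decide locally what is "interesting" so the central system receives aggregates and exceptions instead of every raw reading.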

Augmented Analytics

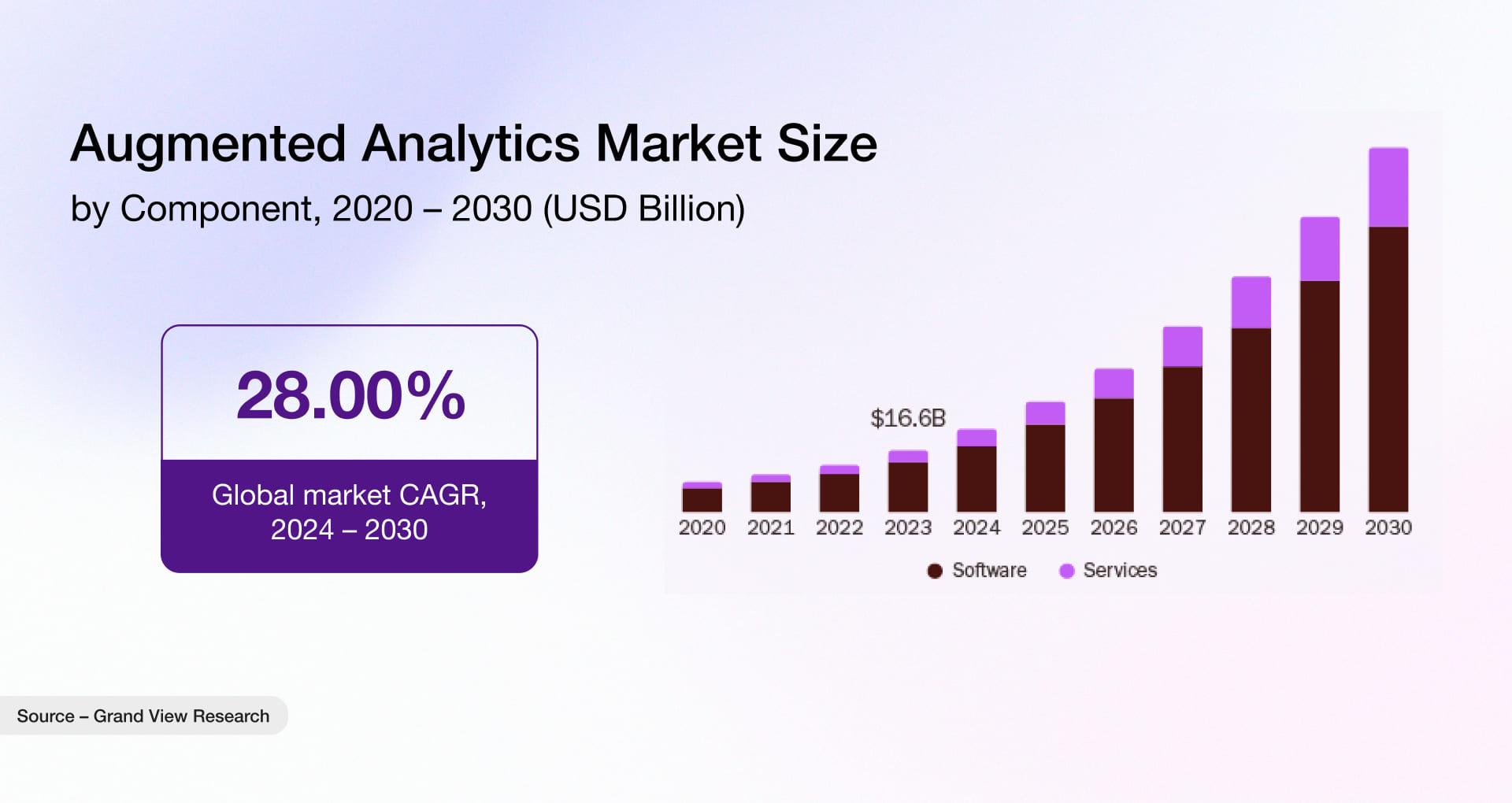

Augmented analytics incorporates machine learning and AI-driven capabilities to help data engineers and analysts derive deeper insights from complex datasets. Its prominent features include automated data visualization, anomaly detection, and predictive insights. The integration of augmented analytics tools is expected to democratize data analysis, streamline data processing, automate insight generation, and enhance decision-making across industries.

Data Automation and AI

AI-driven automation is set to revolutionize data engineering workflows. By automating repetitive tasks such as data pipeline orchestration, quality checks, and testing, organizations can improve efficiency and accuracy while freeing data professionals to focus on higher-value work like analysis and strategy. Automation and AI also speed up data analysis while identifying and correcting data anomalies and inconsistencies, enabling real-time insights for faster decision-making.
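Orchestrators typically model a pipeline as a directed acyclic graph (DAG) of tasks. The sketch below shows that core idea with Python's standard `graphlib` module: tasks run in dependency order, and an automated quality check gates the final load. The task names, the sample rows and the "no valid rows" rule are hypothetical, and this is not the API of any particular orchestration tool.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
pipeline = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform": {"extract_orders", "extract_customers"},
    "quality_check": {"transform"},
    "load_warehouse": {"quality_check"},
}

state: dict[str, list] = {}


def run_task(name: str) -> None:
    if name == "extract_orders":
        state["orders"] = [{"id": 1, "amount": 40.0}, {"id": 2, "amount": None}]
    elif name == "extract_customers":
        state["customers"] = [{"id": 10}]
    elif name == "transform":
        # Drop rows with missing amounts before they reach the warehouse.
        state["clean"] = [o for o in state["orders"] if o["amount"] is not None]
    elif name == "quality_check":
        # Automated gate: fail fast instead of loading bad or empty data.
        if not state["clean"]:
            raise ValueError("quality check failed: no valid rows")
    elif name == "load_warehouse":
        state["loaded"] = state["clean"]


# static_order() yields every task after all of its dependencies.
order = list(TopologicalSorter(pipeline).static_order())
for task in order:
    run_task(task)

print(order)
print(state["loaded"])
```

Declaring dependencies instead of hard-coding a sequence is what lets automation tools parallelize independent tasks and rerun only what failed.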

Big Data Insights

As the adoption of the Internet of Things (IoT) continues to increase, vast amounts of data are being generated by its diverse devices. In 2026 and beyond, enterprises will increasingly embrace big data insights to leverage predictive and prescriptive analytics and AI to extract meaningful patterns for data-driven decisions. This trend is also driving a surge in big data engineering technologies. They are crucial for storing, processing, and analyzing the massive influx of data, enabling organizations to extract valuable insights and optimize operations.

DataOps and MLOps

DataOps and MLOps methodologies continue to gain traction in 2026, emphasizing collaboration, automation, and continuous integration in data and machine learning workflows. These practices enable faster development cycles while ensuring the efficiency, reliability, and scalability of AI and machine learning models in production environments. Together, DataOps and MLOps represent a fundamental shift in how organizations can unlock the full potential of their data to gain a competitive edge in 2026.

Data Quality and Data Integration Reinforced by Data Observability

Data pipelines are growing larger and more complex than ever before, and the effects are clear: data quality and integration matter more than ever, which makes them one of the latest trends in data engineering. Today, data quality isn’t just about being correct. It’s about data being consistent, complete, timely, and trustworthy throughout its life cycle, and keeping it that way in real time is often a huge challenge.

This is where data observability steps in. Much like application monitoring, data observability gives teams end-to-end visibility into how their data systems are performing. With it, teams can monitor how fresh their data is, spot unusual changes in volume, detect schema changes, and trace where data comes from. This helps them find and fix data problems faster, so data stays high-quality and trustworthy even as they integrate a growing number of data sources. Ultimately, data observability is the glue that preserves integrity across integrated data platforms in modern architectures.
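The observability signals described above (freshness, volume and schema changes) can be sketched as simple checks over table metadata. This is a minimal illustration under assumed inputs: the `TableSnapshot` structure, the 3-standard-deviation volume rule and the 24-hour freshness limit are hypothetical choices, not the behavior of any particular observability product.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from statistics import mean, stdev


@dataclass
class TableSnapshot:
    last_loaded: datetime      # when the table was last updated
    row_count: int             # rows in the latest load
    columns: dict[str, str]    # column name -> type


def check_table(snap: TableSnapshot, expected_columns: dict[str, str],
                past_row_counts: list[int], max_age: timedelta) -> list[str]:
    issues = []

    # Freshness: has the table been updated recently enough?
    age = datetime.now(timezone.utc) - snap.last_loaded
    if age > max_age:
        issues.append(f"stale: last load {age} ago")

    # Volume: flag loads more than 3 standard deviations from recent history.
    mu, sigma = mean(past_row_counts), stdev(past_row_counts)
    if sigma > 0 and abs(snap.row_count - mu) > 3 * sigma:
        issues.append(f"volume anomaly: {snap.row_count} rows vs mean {mu:.0f}")

    # Schema: detect added, dropped or retyped columns.
    if snap.columns != expected_columns:
        added = snap.columns.keys() - expected_columns.keys()
        dropped = expected_columns.keys() - snap.columns.keys()
        retyped = {c for c in snap.columns.keys() & expected_columns.keys()
                   if snap.columns[c] != expected_columns[c]}
        issues.append(f"schema drift: added={sorted(added)} "
                      f"dropped={sorted(dropped)} retyped={sorted(retyped)}")
    return issues


snap = TableSnapshot(
    last_loaded=datetime.now(timezone.utc) - timedelta(hours=30),
    row_count=120,
    columns={"order_id": "int", "amount": "str"},
)
issues = check_table(snap, {"order_id": "int", "amount": "float"},
                     past_row_counts=[10_000, 10_250, 9_900, 10_100],
                     max_age=timedelta(hours=24))
print(issues)
```

In practice these checks would run after every load and feed alerts, so a stale, truncated or retyped table is caught before it reaches a dashboard.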

Graph Databases and Knowledge Graphs

Businesses looking to get the most out of their data are finding that traditional databases no longer cut it. Graph databases and knowledge graphs offer a better way: they let data engineers store, explore, and query connections between data in real time. This empowers businesses to go beyond basic number crunching and understand how their data is related. In a world where data gives businesses an edge, graph technology is creating new ways for data systems to adapt and respond, which is why it is undoubtedly part of the future of data engineering.
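To illustrate why relationship questions are natural in a graph, here is a plain-Python sketch that stores entities as an adjacency list and finds everything within two hops of a customer. A graph database would answer this with a declarative query (for example, a variable-length path pattern in Cypher); the in-memory traversal below is a hypothetical stand-in for that, not any database's API, and the node names are made up.

```python
from collections import deque

# Hypothetical knowledge graph: node -> list of (relationship, neighbor).
graph = {
    "customer:alice": [("PURCHASED", "product:laptop"), ("LIVES_IN", "city:berlin")],
    "product:laptop": [("MADE_BY", "brand:acme"), ("PURCHASED_BY", "customer:bob")],
    "customer:bob": [("LIVES_IN", "city:berlin")],
    "city:berlin": [],
    "brand:acme": [],
}


def within_hops(start: str, max_hops: int) -> dict[str, int]:
    """Breadth-first search returning each reachable node and its hop distance."""
    distances = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if distances[node] == max_hops:
            continue  # do not expand past the hop limit
        for _relationship, neighbor in graph.get(node, []):
            if neighbor not in distances:
                distances[neighbor] = distances[node] + 1
                queue.append(neighbor)
    return distances


print(within_hops("customer:alice", max_hops=2))
```

The same question in a relational model would need one self-join per hop; in a graph it is a traversal whose cost depends on the neighborhood explored, not on total table size.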

Retrieval Augmented Generation (RAG)

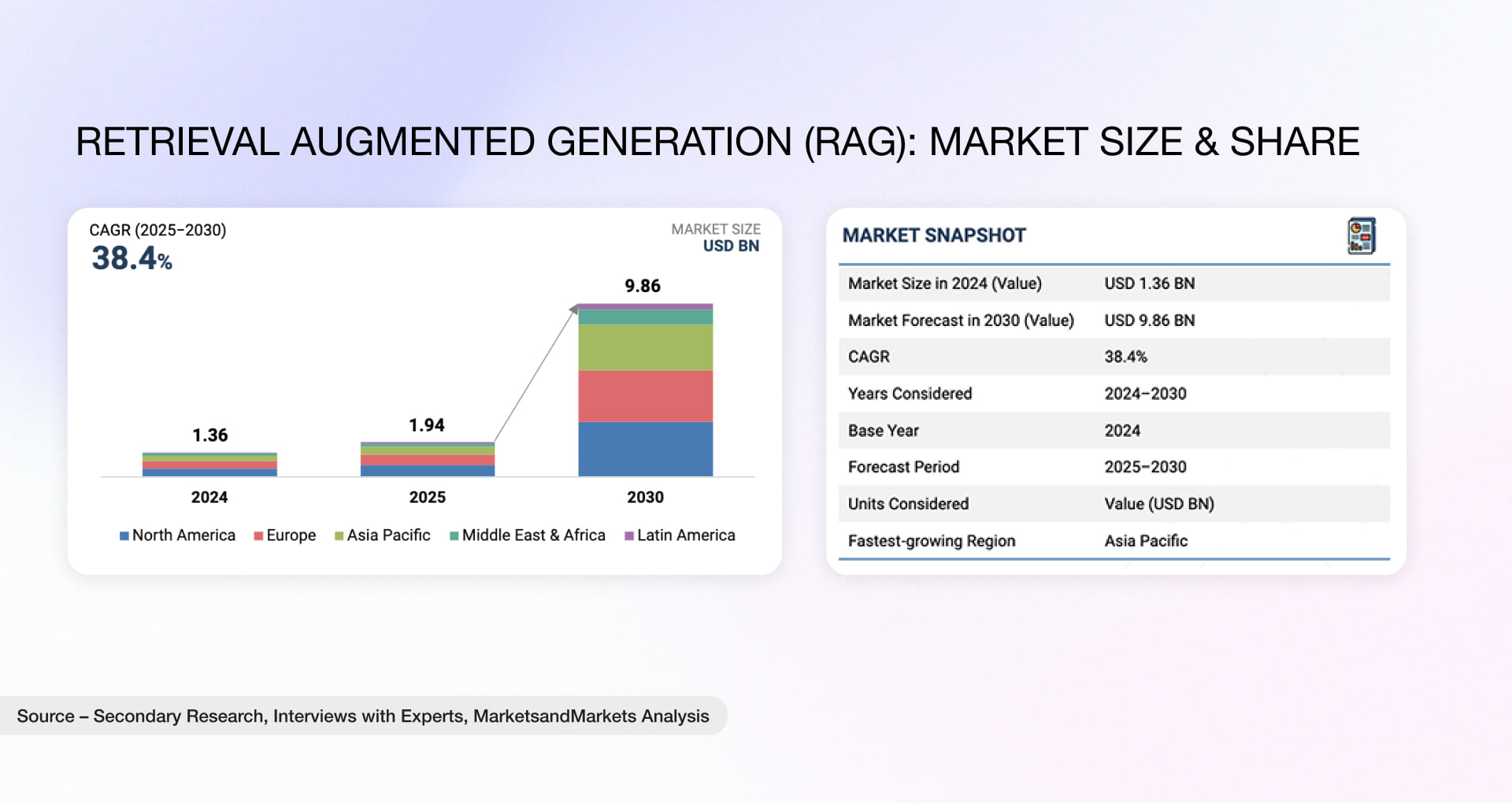

The rising need for real-time, specific insights in Generative AI has turned Retrieval Augmented Generation (RAG) into a vital strategy in data engineering. In conventional AI models, using static data can limit how accurate and relevant the generated content is. RAG, however, combines dynamic data retrieval with large language models (LLMs), providing the most current, relevant information when it’s needed. This method is especially valuable in enterprise settings, where companies need to keep up with rapidly changing data and produce accurate results instantly.

By merging data retrieval from external sources with the generative power of LLMs, RAG allows data engineers to build systems that are not only more precise but also responsive to the ever-changing demands of users and businesses. Integrating real-time information into the generation process ensures that companies can offer up-to-date, relevant answers while maintaining speed and accuracy. As data challenges continue to grow, RAG-powered pipelines will become one of the key trends in data engineering, helping businesses stay ahead in a competitive, data-centric world.
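A minimal sketch of the retrieval half of a RAG pipeline, assuming a tiny in-memory document store and a simple bag-of-words cosine similarity in place of the learned embeddings and vector database a production system would typically use. The documents are hypothetical sample data, and the actual LLM call is deliberately left out: `build_prompt` only shows how retrieved context is injected into the prompt that would be sent to a model.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base; in a real pipeline this is refreshed from live sources.
documents = {
    "pricing-2026": "The premium plan costs 49 USD per month as of January 2026.",
    "refunds": "Refunds are processed within 14 days of a cancellation request.",
    "support": "Support is available 24/7 via chat for premium customers.",
}


def vectorize(text: str) -> Counter:
    """Bag-of-words term counts (a stand-in for an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, k: int = 2) -> list[str]:
    """Return the ids of the k documents most similar to the query."""
    q = vectorize(query)
    ranked = sorted(documents, key=lambda d: cosine(q, vectorize(documents[d])), reverse=True)
    return ranked[:k]


def build_prompt(query: str) -> str:
    """Ground the LLM by placing the retrieved, current context ahead of the question."""
    context = "\n".join(f"- {documents[d]}" for d in retrieve(query))
    return (f"Answer using only the context below.\n"
            f"Context:\n{context}\n\nQuestion: {query}")


print(build_prompt("How much does the premium plan cost per month?"))
```

Because the answer comes from whatever is in `documents` at query time, updating the store updates the answers without retraining the model, which is the core appeal of RAG described above.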

Serverless Data Engineering

In the evolving architecture of data systems, serverless data engineering is a systemic shift, not merely a tooling upgrade, and it remains one of the latest trends in data engineering. It decomposes the traditional monolith of infrastructure-bound pipelines into discrete, reactive functions, each triggered by the arrival of data itself. By abstracting away compute orchestration, it frees engineers to focus on the integrity of data flows and the semantics of transformations. In this model, infrastructure becomes an invisible ally, and the pipeline becomes an adaptive mechanism: resilient, cost-aware, and tuned for temporal precision. It’s not just an engineering convenience; it’s architectural clarity in motion.
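As a concrete illustration of such a discrete, reactive function, here is a minimal sketch written in the shape of an AWS Lambda Python handler responding to an S3 object-created notification. The event layout (`Records[].s3.bucket.name` and `Records[].s3.object.key`) follows the S3 event notification format; the `raw/` to `curated/` routing and the hypothetical `transform_object` step are assumptions, and the code only plans the work instead of actually reading from or writing to S3.

```python
from urllib.parse import unquote_plus


def transform_object(bucket: str, key: str) -> dict:
    """Hypothetical transformation step; a real function would read and write S3 here."""
    target = "curated/" + key.split("/", 1)[-1]
    return {"source": f"s3://{bucket}/{key}", "target": f"s3://{bucket}/{target}"}


def handler(event, context):
    """Invoked once per event; there are no servers or schedulers to manage."""
    results = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 notifications (spaces become '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        if key.startswith("raw/") and key.endswith(".csv"):
            results.append(transform_object(bucket, key))
    return {"processed": len(results), "outputs": results}


# Local run with a trimmed-down sample event (bucket and keys are made up).
sample_event = {"Records": [
    {"s3": {"bucket": {"name": "my-data-lake"}, "object": {"key": "raw/orders+2026-01.csv"}}},
    {"s3": {"bucket": {"name": "my-data-lake"}, "object": {"key": "tmp/ignore.json"}}},
]}
result = handler(sample_event, context=None)
print(result)
```

Keeping the handler a pure function of its event, as here, also makes it easy to test locally before wiring it to real triggers.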

Adoption of Open Table Formats

If your team is serious about building a modern data stack, open table formats are worth paying attention to. Tools like Apache Iceberg, Delta Lake and Apache Hudi give you ACID transactions, time travel and schema evolution right on cloud storage.

In a nutshell, you get a data lake with the reliability of a warehouse, without being locked into one vendor. It helps you keep control of your data, makes sharing across teams easier and keeps your analytics stack flexible when new engines and tools show up. The change to open table formats will be a significant factor as you plan for 2026.
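Open table formats achieve ACID commits and time travel by keeping immutable data plus a metadata log of table snapshots. The toy class below is a conceptual sketch of that idea in plain Python, not the API of Iceberg, Delta Lake or Hudi: each commit is validated first and then published as a new snapshot in one step, schema evolution adds columns without rewriting old rows, and reads can target an older version.

```python
from copy import deepcopy
from typing import Optional


class ToyTable:
    """Conceptual model of an open table format: an append-only log of snapshots."""

    def __init__(self, schema: list[str]):
        # Version 0: an empty table with the initial schema.
        self._snapshots = [{"schema": list(schema), "rows": []}]

    @property
    def version(self) -> int:
        return len(self._snapshots) - 1

    def commit(self, new_rows: list[dict], add_columns: Optional[list[str]] = None) -> int:
        """Validate first, then publish a new snapshot in one step (all or nothing)."""
        base = self._snapshots[-1]
        # Schema evolution: new columns are appended; existing rows are left untouched.
        schema = base["schema"] + [c for c in (add_columns or []) if c not in base["schema"]]
        for row in new_rows:
            unknown = set(row) - set(schema)
            if unknown:
                raise ValueError(f"columns not in schema: {sorted(unknown)}")
        # Rows are copied here for simplicity; real formats reuse existing data files.
        rows = deepcopy(base["rows"]) + deepcopy(new_rows)
        self._snapshots.append({"schema": schema, "rows": rows})
        return self.version

    def read(self, version: Optional[int] = None) -> list[dict]:
        """Time travel: read any version; columns missing from older rows come back as None."""
        snap = self._snapshots[self.version if version is None else version]
        return [{c: row.get(c) for c in snap["schema"]} for row in snap["rows"]]


table = ToyTable(["order_id", "amount"])
table.commit([{"order_id": 1, "amount": 40.0}])
table.commit([{"order_id": 2, "amount": 15.0, "channel": "web"}], add_columns=["channel"])
print(table.read())           # latest version, with the evolved schema
print(table.read(version=1))  # time travel to the first commit
```

Because a failed commit raises before anything is appended, readers never observe a half-written version, which is the essence of the ACID guarantee these formats bring to plain cloud storage.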

Domain-Specific and Specialized Language Models

You have probably already worked with generic LLMs. They are powerful, but they do not always “get” your business. That is where domain-specific and specialized language models come in.

When you train or fine-tune models on industry data, they start to understand your terms, your regulations and your real-world workflows. For a data engineering team, that means better classification, cleaner entity extraction, clearer documentation and even smarter assistance while writing pipeline code.

As you move through 2026, expect more of your AI work to look like this. Not just “use an LLM,” but “use the right model for our domain” and plug it directly into your data workflows.

Embracing Zero-ETL Architectures

Think about how much effort your team spends just moving data around. Exporting, transforming, loading, fixing breaks and repeating it all again. Zero-ETL architectures aim to cut a lot of that overhead.

Instead of running heavy ETL jobs, you let analytics services read from operational stores or shared storage in near real time using built-in cloud integrations. You get fresher dashboards, fewer moving parts and a simpler stack to maintain.

As you plan your architecture for 2026, zero-ETL is worth a serious look. It will not replace every pipeline, but for many use cases it can reduce latency and complexity, as long as you back it with solid governance, data contracts and observability.

The Rise of Synthetic Data in Data Engineering

If you have ever struggled to get enough clean, safe data for testing or model training, synthetic data is going to feel like a relief.

By using statistical techniques and generative AI, you can create realistic but anonymous datasets that behave like production data. Your team can test pipelines, simulate rare edge cases, rebalance skewed datasets and share data with partners without exposing sensitive information.

With privacy rules getting tighter and labeled data still expensive and slow to collect, synthetic data will keep gaining importance through 2026. It is a very practical way for you to move faster without putting customer trust at risk.
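A minimal sketch of the statistical route, assuming a deliberately simple approach: fit per-column statistics on a real sample (mean and standard deviation for numeric columns, observed frequencies for categorical ones), then sample new rows from those statistics. The column names and sample rows are hypothetical. This naive method ignores correlations between columns and is not a formal privacy guarantee on its own; production tools typically use richer generative models.

```python
import random
from collections import Counter
from statistics import mean, stdev


def fit(rows: list[dict], numeric: list[str], categorical: list[str]) -> dict:
    """Learn simple per-column statistics from the real sample."""
    model = {}
    for col in numeric:
        values = [r[col] for r in rows]
        model[col] = ("normal", mean(values), stdev(values))
    for col in categorical:
        counts = Counter(r[col] for r in rows)
        model[col] = ("categorical", list(counts), list(counts.values()))
    return model


def sample(model: dict, n: int, seed: int = 42) -> list[dict]:
    """Generate n synthetic rows; each column is sampled independently."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    out = []
    for _ in range(n):
        row = {}
        for col, spec in model.items():
            if spec[0] == "normal":
                # Clamp at zero because amounts cannot be negative.
                row[col] = round(max(0.0, rng.gauss(spec[1], spec[2])), 2)
            else:
                row[col] = rng.choices(spec[1], weights=spec[2])[0]
        out.append(row)
    return out


# Hypothetical "production" sample; only the fitted statistics are used for generation.
real = [
    {"amount": 42.0, "country": "DE"}, {"amount": 18.5, "country": "US"},
    {"amount": 77.0, "country": "US"}, {"amount": 30.0, "country": "IN"},
    {"amount": 55.5, "country": "US"},
]
model = fit(real, numeric=["amount"], categorical=["country"])
synthetic = sample(model, n=1000)
print(synthetic[:3])
```

The same `sample` call can also generate far more rows than the original sample, or be reweighted to over-represent a rare category when testing edge cases.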

Integration of AI and Machine Learning

You can think of AI in data engineering as a quiet co-pilot that sits inside your platform. It helps tune queries, predicts which pipelines might fail, spots schema changes and flags suspicious data quality issues before they hit your dashboards.

Instead of manually chasing every fire, your team gets early warnings and smart suggestions. Over time, the platform learns from its own history and becomes easier to run.

As your data estate expands in 2026, the combination of AI and machine learning will be a game changer. It will let your team stay lean, automate repetitive checks, and spend its time on architecture, design and business impact instead of constant firefighting.

Real-time Data Streaming

Event stream processing stands out as a major trend in 2026 because of its real-time processing capabilities, scalability, and central role in event-driven architectures. Streaming frameworks like Apache Kafka and Apache Flink handle massive data volumes and let businesses process information as it flows in, which is crucial for timely decision-making.

Its significance extends to IoT and edge computing, where real-time analysis of data generated by these devices becomes essential. Moreover, integrating real-time data streaming with AI/ML fosters adaptive systems, while its impact on various sectors, including healthcare, e-commerce, and retail, helps deliver personalized experiences to consumers.
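Kafka and Flink handle this at scale with partitioning, state management and fault tolerance. The core computation behind many real-time dashboards, a tumbling-window aggregation, can be sketched in plain Python over a simulated event stream. The event fields (`ts`, `product`, `amount`) and the 60-second window are assumptions, this is not the Kafka or Flink API, and the sketch assumes events arrive in timestamp order, a restriction real engines relax using watermarks.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def tumbling_window_totals(events: Iterable[dict],
                           window_s: int = 60) -> Iterator[tuple[int, dict]]:
    """Emit (window_start, totals per product) each time a window closes.

    Assumes events arrive ordered by timestamp (in seconds).
    """
    current_start = None
    totals: dict[str, float] = defaultdict(float)
    for event in events:
        start = event["ts"] - event["ts"] % window_s   # align to the window boundary
        if current_start is not None and start != current_start:
            yield current_start, dict(totals)            # window closed: emit its result
            totals = defaultdict(float)
        current_start = start
        totals[event["product"]] += event["amount"]
    if current_start is not None:
        yield current_start, dict(totals)                # flush the last open window


stream = [
    {"ts": 0, "product": "a", "amount": 10.0},
    {"ts": 30, "product": "b", "amount": 5.0},
    {"ts": 59, "product": "a", "amount": 2.5},
    {"ts": 61, "product": "a", "amount": 1.0},
    {"ts": 125, "product": "b", "amount": 4.0},
]
for window_start, totals in tumbling_window_totals(stream):
    print(window_start, totals)
```

Because it is a generator, results are emitted as soon as each window closes rather than after the whole stream is read, which mirrors how streaming engines produce continuous output.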

Top Data Engineering Predictions for 2026

The upcoming years are expected to bring key advancements and transformative shifts in data engineering. The predictions below outline the most significant developments anticipated in 2026 and beyond.

#Prediction 1 – Focus on Data Security and Cybersecurity

Intensified concerns about data security will lead to a greater emphasis on cybersecurity measures within data engineering. Strengthening data encryption and access controls, and implementing robust security protocols, will be critical to safeguarding sensitive information against evolving cyber threats.

#Prediction 2 – Data Governance and Ethical Considerations

Enhanced focus on data governance and ethical considerations is anticipated to become a foundation of data engineering best practices. With the increasing emphasis on privacy regulations and ethical use of data, data engineers must integrate robust governance frameworks into their processes to ensure compliance and build trust with consumers and stakeholders.

#Prediction 3 – AI Becomes the Data Engineer’s Copilot

As AI copilots become common in enterprises in 2026, artificial intelligence is fast becoming a helpful partner for data engineers. It can automate repetitive tasks such as data ingestion and cleaning, help automate data pipelines, generate code snippets, and identify problems in pipelines. This lets engineers focus on solving critical issues and improving their data systems. With AI working alongside engineers, managing data becomes more accessible and more efficient.

#Prediction 4 – Embracing Data Contracts

Data contracts help improve transparency, trust, and collaboration in data management. In today’s complex data ecosystem, they have the power to revolutionize data management by establishing clear, formal agreements between data producers and consumers. These contracts set out ownership, quality standards, and usage terms, so everyone understands expectations and works from the same page. The result is more reliable data production, more efficient data exchange, stronger cross-team collaboration, and a lower risk of misuse.

Although data contracts are still relatively new to the industry, their adoption is expected to increase significantly within the next year or so.
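Contracts are commonly written as declarative specifications (often YAML) and enforced in CI or at ingestion. A minimal sketch of the enforcement side in Python, assuming a hypothetical `orders` contract with an owner, a version, typed fields and one quality rule; the structure is illustrative and does not follow any specific data contract standard.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass
class FieldSpec:
    py_type: type
    required: bool = True
    rule: Optional[Callable[[Any], bool]] = None   # optional quality rule


@dataclass
class DataContract:
    name: str
    owner: str                 # accountable producer team
    version: str
    fields: dict[str, FieldSpec]

    def validate(self, record: dict) -> list[str]:
        """Return a list of violations; an empty list means the record conforms."""
        errors = []
        for name, spec in self.fields.items():
            if name not in record or record[name] is None:
                if spec.required:
                    errors.append(f"{name}: missing required field")
                continue
            value = record[name]
            if not isinstance(value, spec.py_type):
                errors.append(f"{name}: expected {spec.py_type.__name__}, "
                              f"got {type(value).__name__}")
            elif spec.rule is not None and not spec.rule(value):
                errors.append(f"{name}: failed quality rule")
        # Fields the producer sends but never agreed to are also violations.
        for extra in sorted(record.keys() - self.fields.keys()):
            errors.append(f"{extra}: not defined in contract")
        return errors


orders_v1 = DataContract(
    name="orders", owner="checkout-team", version="1.0.0",
    fields={
        "order_id": FieldSpec(int),
        "amount": FieldSpec(float, rule=lambda v: v >= 0),
        "coupon": FieldSpec(str, required=False),
    },
)
print(orders_v1.validate({"order_id": 7, "amount": 19.99}))  # []
print(orders_v1.validate({"order_id": "7", "amount": -5.0, "note": "x"}))
```

Running the same check on the producer side (before publishing) and the consumer side (at ingestion) is what turns the contract from documentation into an enforced agreement.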

Why Choose Rishabh Software as Your Trusted Data Engineering Partner?

As your trusted partner in data engineering, Rishabh Software offers extensive expertise and a comprehensive range of services. We cover the full cycle of data management, including acquisition, cleansing, conversion, interpretation, and deduplication. This ensures that your data is handled comprehensively by experienced data engineers from start to finish, guaranteeing accuracy and reliability. With hands-on expertise in the AWS and Azure platforms, Rishabh Software is well-equipped to harness the benefits of cloud technologies for efficient data processing and management.

Our extensive experience, proficiency in cloud technologies, comprehensive data management capabilities, and expertise in modernizing data infrastructure make us a reliable, trusted data engineering company for all your data engineering needs. We take an efficient, smart approach to migrating business data from legacy on-premises systems to modern databases, including cloud storage infrastructure. This ensures that your data environment is optimized for enhanced performance and for real-time exploration and analysis.

Takeaway: The Future of Data Engineering is Dynamic

The 2026 data engineering trends outlined above show a move toward innovative technologies like AI and machine learning to handle information more efficiently.

An even more significant shift is the faster processing of data through edge computing, which makes systems quicker and more responsive. The future of data engineering is data-driven, faster, more innovative, and accessible to all.

Footnotes:

1. https://www.marketdataforecast.com/market-reports/big-data-engineering-services-market
