You can spin up a GenAI demo in a week. Scaling that same experience into daily work is where reality shows up: hallucinations that sound confident, answers that can’t be traced back to a source, and “helpful” assistants that accidentally expose restricted content.

Gartner predicts that at least 30 percent of GenAI projects will be abandoned after proof of concept by the end of 2025, with poor data quality and weak risk controls among the key reasons.

Implementing GenAI is not about picking the “best model.” It is about building an AI-ready data ecosystem that delivers trusted context on demand, respects user permissions, and works across warehouses, documents, and knowledge bases.

In this blog, you’ll get a practical reference architecture, a trust layer that enforces identity, permissions, freshness, and evidence, plus an operating model and FinOps guardrails so your GenAI copilots and agents stay reliable and cost-controlled in production.

Why AI Initiatives Fail Without AI-Ready Data Architecture

Most AI failures are not model failures. They are context failures.

When enterprise teams push GenAI into real workflows, the model becomes the last mile. The foundation is your data ecosystem. Initiatives break for predictable, non-AI reasons:

- Unreliable context: missing lineage, stale data, conflicting definitions, unverified sources

- Governance gaps: unclear ownership, inconsistent access policies, weak auditability

- Weak runtime controls: no guardrails for retrieval, permissions, prompt and response handling, or output risk

This is not an argument against GenAI. It is a plan to make GenAI deployable safely, repeatedly and economically by engineering the data ecosystem underneath it.

If you want assistants, agents, and analytics copilots that behave consistently, you need an AI-Ready Data Architecture that delivers trusted context on demand. Our readiness assessment helps you pinpoint where your ecosystem fails before your pilot expands into a business-critical workflow. You can follow this AI readiness assessment blog for a structured approach to planning these phases.

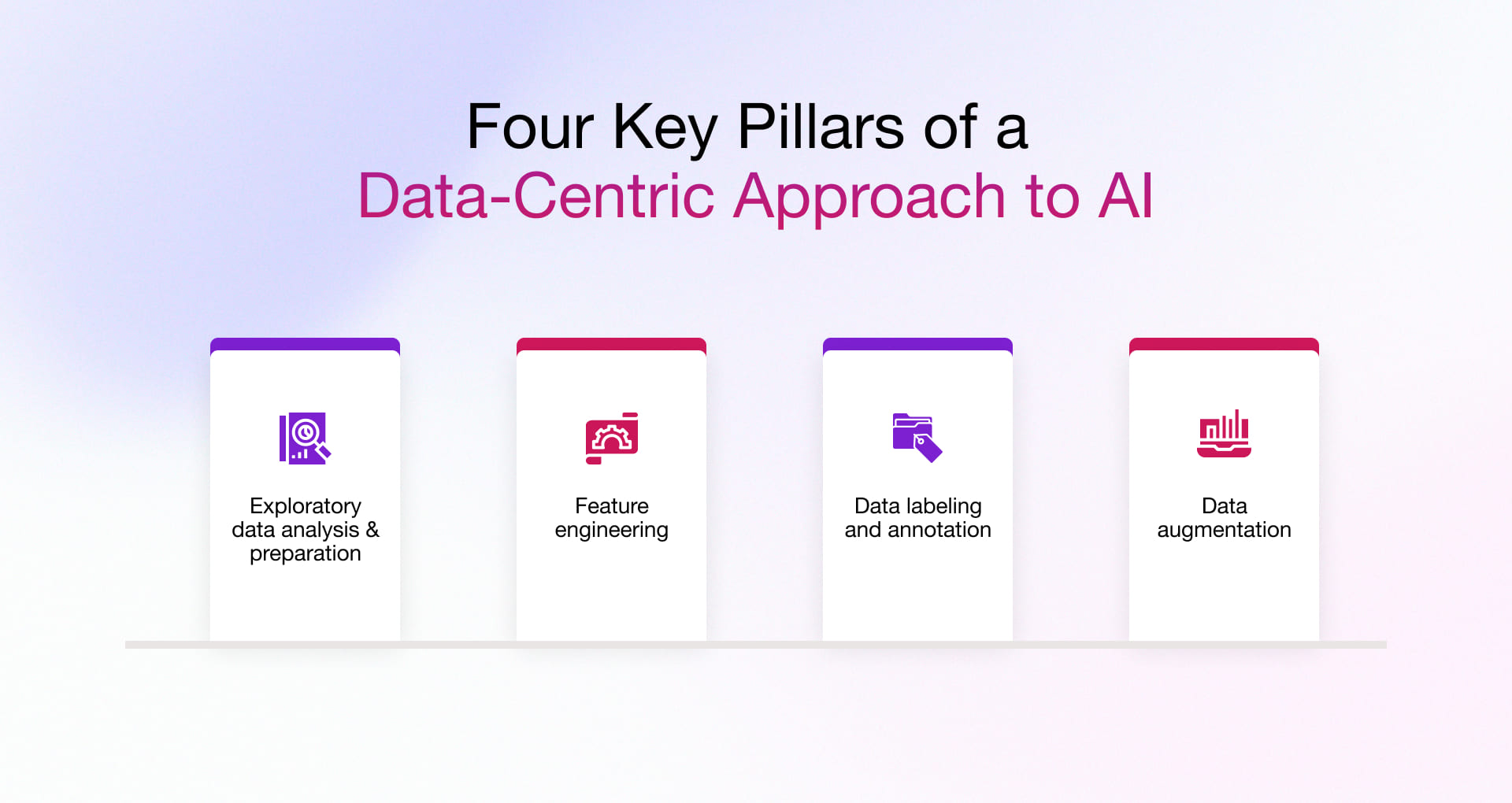

Four Key Pillars of a Data-Centric Approach to AI

GenAI succeeds or fails on data. If your data is messy, outdated, or access is unclear, your copilot will produce unreliable answers. Use these four pillars to make GenAI outputs accurate, traceable and safe.

1) Exploratory data analysis (EDA) and data preparation

This makes data and documents clean, current and usable for retrieval.

- Inventory your sources (tables, SharePoint, Confluence, PDFs, tickets) and assign an owner for each.

- Remove junk: duplicates, outdated versions, broken links, empty files, and boilerplate headers and footers.

- Standardize formats: dates, IDs, units, and naming conventions.

- Chunk documents by headings and sections so retrieval can fetch the right passage.

- Keep the source link, version and last-updated date so answers can cite evidence.
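
The chunking and provenance steps above can be sketched as follows. This is a minimal illustration, assuming documents use Markdown-style `#` headings; the `Chunk` fields, the `chunk_by_heading` helper, and the sample URL are hypothetical names chosen for the example, not part of any specific library.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    heading: str
    text: str
    source_url: str    # hypothetical field: link back to the original document
    version: str
    last_updated: str  # ISO date, kept so answers can cite current evidence


def chunk_by_heading(doc: str, source_url: str, version: str, last_updated: str) -> list[Chunk]:
    """Split a document at Markdown-style headings, one chunk per section."""
    chunks: list[Chunk] = []
    heading, body = "Preamble", []

    def flush() -> None:
        # Emit the section collected so far, skipping empty sections.
        text = "\n".join(body).strip()
        if text:
            chunks.append(Chunk(heading, text, source_url, version, last_updated))

    for line in doc.splitlines():
        if line.startswith("#"):
            flush()
            heading, body = line.lstrip("#").strip(), []
        else:
            body.append(line)
    flush()
    return chunks


doc = (
    "# Leave policy\n"
    "Employees accrue leave monthly.\n"
    "\n"
    "## Carry-over\n"
    "Unused leave carries over for one year."
)
chunks = chunk_by_heading(doc, "https://intranet.example/hr/leave", "v3", "2025-01-15")
for c in chunks:
    print(c.heading, "|", c.version, "|", c.last_updated)
```

Because every chunk carries its source link, version, and last-updated date, a downstream assistant can cite the exact section it used rather than the document as a whole.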

2) Feature engineering

Helps the system find the right supporting content fast.

- Add consistent metadata to every item: domain, product, customer, region, effective date, owner, sensitivity.

- Use hybrid search (keyword + semantic) so you capture exact terms and meaning.

- Boost trusted sources: mark approved or authoritative content and rank it above drafts and notes.

- Create a small “golden questions” set (20 to 50 real questions) and track whether retrieval pulls the right sources.
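
One way to track the golden questions set is a simple hit rate: the share of questions for which at least one expected source appears in the top results. The sketch below assumes a retriever that returns ranked source IDs; `hit_rate_at_k`, `fake_retrieve`, and the source IDs are illustrative placeholders, not a real retrieval API.

```python
def hit_rate_at_k(golden: dict[str, set[str]], retrieve, k: int = 5) -> float:
    """Share of golden questions where an expected source is in the top k results."""
    hits = 0
    for question, expected_sources in golden.items():
        top_k = retrieve(question)[:k]
        if expected_sources & set(top_k):
            hits += 1
    return hits / len(golden) if golden else 0.0


# Hypothetical golden set: question -> source IDs a correct answer should cite.
golden = {
    "What is the refund window?": {"policy/refunds-v4"},
    "Who approves travel over budget?": {"sop/travel-approvals"},
}


def fake_retrieve(question: str) -> list[str]:
    # Stand-in for a real retriever; returns ranked source IDs.
    canned = {
        "What is the refund window?": ["faq/refunds", "policy/refunds-v4"],
        "Who approves travel over budget?": ["notes/travel-draft"],
    }
    return canned.get(question, [])


score = hit_rate_at_k(golden, fake_retrieve, k=5)
print(f"hit rate @5: {score:.2f}")
```

Running the same set after every index or metadata change gives an early signal when retrieval quality moves, before users notice.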

3) Data labeling and annotation

Reduces ambiguity and enforces governance.

- Label content by type and status: policy, SOP, contract, FAQ; approved vs draft.

- Label access: public, internal, confidential, plus role-based visibility.

- Tag key entities (products, customers, processes, teams) so retrieval can filter and rank better.

- Add human review for high-risk answers (legal, finance, HR) and feed fixes back into labels and rules.

4) Data augmentation

Fills gaps and tests safely without corrupting production truth.

- Generate synthetic test cases to validate prompts, guardrails, and workflows without exposing sensitive data.

- Enrich context with reference data (taxonomy, product catalog, regulatory clauses) and link it to internal terms.

- Create do-not-use sets (restricted docs, outdated policies) to confirm the system blocks them correctly.

- Track drift: update indexes and metadata when policies, templates, or products change.

Do this first: pick one GenAI use case, certify a small set of trusted sources, apply these four pillars, and measure retrieval quality before you scale.

Core Principles of AI-Ready Data Architecture

An AI-Ready Data Architecture works when you treat data as a product, apply standards across formats, stabilize meaning, and embed governance early. These principles turn “available data” into AI-ready data that AI can use without guessing.

1. Treat Data Like a Product So AI Stops Guessing

If you want GenAI to deliver in real workflows, treat data like a product. A product has an owner, users, a roadmap, and disciplined change control.

Strong data products have:

- A clear owner who stays accountable

- Known consumers who rely on it

- Reliability targets for freshness, quality, and availability (SLOs and SLAs)

- Versioning so teams track changes

- Discoverability so people and AI can find the right dataset

- Data contracts so definitions do not change without warning

- A deprecation window so teams can migrate before breaking changes

Real-world scenario:

A finance copilot is asked, “What’s our revenue?” It pulls figures from two tables. One nets out discounts; the other does not. Both appear legitimate, so the assistant answers with confidence. The board meeting derails when the number does not align with the agreed definition.

One approved definition of revenue, published as a trusted data product, prevents this. That is AI-ready data in practice: owned, observable, versioned and consistent.
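
A data contract can be as small as a versioned schema plus a check for breaking changes. The sketch below is one possible shape under stated assumptions: the `DataContract` fields, the "major.minor" versioning scheme, and the column names are hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass


@dataclass
class DataContract:
    name: str
    version: str             # "major.minor", an assumed versioning scheme
    owner: str
    columns: dict[str, str]  # column name -> declared type


def breaking_changes(old: DataContract, new: DataContract) -> list[str]:
    """List changes that would break consumers: removed or retyped columns."""
    problems = []
    for col, col_type in old.columns.items():
        if col not in new.columns:
            problems.append(f"removed column: {col}")
        elif new.columns[col] != col_type:
            problems.append(f"type change on {col}: {col_type} -> {new.columns[col]}")
    return problems


def requires_major_bump(old: DataContract, new: DataContract) -> bool:
    """True when breaking changes ship without a major version bump."""
    old_major = int(old.version.split(".")[0])
    new_major = int(new.version.split(".")[0])
    return bool(breaking_changes(old, new)) and new_major <= old_major


v1 = DataContract(
    "revenue", "1.2", "finance-data",
    {"order_id": "string", "net_revenue": "decimal", "discount": "decimal"},
)
v2 = DataContract(
    "revenue", "1.3", "finance-data",
    {"order_id": "string", "net_revenue": "float"},
)
print(breaking_changes(v1, v2))
print("blocked:", requires_major_bump(v1, v2))
```

A check like this can run in CI so that a silent redefinition of revenue is flagged before any copilot reads the new table.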

2. Apply Unified Standards to Structured and Unstructured Data

AI breaks when your rules stop at the warehouse. If you govern tables tightly but treat documents loosely, GenAI retrieves the wrong content or shows the right content to the wrong person.

AI-ready standardization requires:

- One set of rules for quality, lineage, auditability, and access across all data types

- Document pipelines with connectors, deduplication, versioning, lifecycle controls, and entity linking

- Permission propagation into indexing and retrieval so access stays consistent end to end

A smart move: Tag documents with the same sensitivity labels you use for tables. Propagate those permissions through your document pipeline. If a user cannot open a file, the AI must not retrieve it.

3. Build a Semantics and Metadata Foundation

Most failures start with a simple question: what does this field mean? If teams cannot answer that clearly, AI cannot answer reliably either.

Minimum semantics you need:

- Canonical entities and metric definitions

- Domain tags and sensitivity classification

- An enforced semantic layer that teams use in production, not just a glossary

Metadata works like a traffic system. It sets the signs, lanes, and rules that keep everything moving safely. Without it, more data and more users create confusion, not progress.

4. Embed Governance Early

Governance should feel like guardrails, not gates. If you bolt it on later, teams route around it. If you build it in early, it scales with less friction.

AI-ready governance includes:

- Identity-aware access

- Consent and privacy controls

- Retention and lifecycle policies

- Purpose-based usage boundaries where needed

With these principles in place, the next step is a blueprint that turns “AI-ready data” from theory into practice and scales delivery without eroding trust.

AI-Ready Data Architecture Blueprint for Scalable Delivery

Think of this blueprint as a data assembly line: Ingest → Store → Transform → Serve → Consume. Each station needs checks. If any station lacks controls, defects move fast and AI amplifies the damage.

This AI-Ready Data Architecture blueprint shows how data moves through your ecosystem and where reliability and governance must live so AI can scale without breaking trust.

1. Ingestion patterns

Ingestion is where many AI issues begin: silent duplication, missing updates, lost permissions, weak versioning, and uncontrolled schema changes. These failures often do not throw errors. They quietly degrade trust.

AI-ready ingestion patterns include:

- Batch ingestion with reconciliation, replay, and idempotency

- CDC for near-real-time operational truth

- Streaming for telemetry and event data

- Unstructured ingestion with dedupe, lifecycle, entity linking, and permission propagation

- Schema definition, validation, and controlled evolution to prevent downstream breakage

Business outcome: fewer mystery incidents and faster onboarding of new sources without destabilizing downstream consumers.
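
Idempotent batch ingestion with reconciliation can be sketched with a content hash as the idempotency key. This is a simplified in-memory illustration; `content_key`, `ingest_batch`, and the dictionary standing in for a store are assumptions for the example, and a real pipeline would use its own keys and storage.

```python
import hashlib


def content_key(record: dict) -> str:
    """Stable hash of a record's content, used as the idempotency key."""
    canonical = "|".join(f"{k}={record[k]}" for k in sorted(record))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def ingest_batch(batch: list[dict], store: dict[str, dict]) -> dict[str, int]:
    """Insert by content hash so a replayed batch does not create duplicates."""
    inserted = skipped = 0
    for record in batch:
        key = content_key(record)
        if key in store:
            skipped += 1
        else:
            store[key] = record
            inserted += 1
    # Reconciliation counts: received should always equal inserted + skipped.
    return {"received": len(batch), "inserted": inserted, "skipped": skipped}


store: dict[str, dict] = {}
batch = [{"id": 1, "status": "open"}, {"id": 2, "status": "closed"}]
first = ingest_batch(batch, store)
replay = ingest_batch(batch, store)  # same batch delivered again
print(first, replay, len(store))
```

The replay leaves the store unchanged, which is the property that makes retries safe.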

2. Storage and Compute Framing

Copying all data into one place is often impractical and expensive. You can still provide a trusted view without centralizing everything, because separation and control matter more than a single location:

- Raw stays raw

- Curated stays validated

- Served stays governed

Common patterns include:

- Lakehouse: open formats with separation of storage and compute for scale

- Warehouse-first: governed query layer for consistent metrics and controlled access

- Hybrid: domain-driven mix with a shared metadata plane to keep governance consistent

Business outcome: easier auditability, more predictable access control, and fewer shadow copies.

3. Transformation and Contracts

Transformation is where reliability becomes real. GenAI exposes transformation weaknesses because it depends on stable inputs.

AI-ready transformation discipline includes:

- Standard schemas and validation tests

- Quality SLOs tied to business criticality

- Data contracts to prevent breaking changes and metric drift

- A semantic layer for canonical entities and metrics

For instance, a support ticket category changes from “Billing Issue” to “Billing.” Dashboards adapt slowly. Retrieval does not. Contracts and tests catch the change before it ships.
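
A contract test for this case can be very small. The sketch below assumes the contract lists the allowed category values; `ALLOWED_CATEGORIES`, `validate_categories`, and `assert_contract` are hypothetical names used only for illustration.

```python
# Hypothetical contract: the category values downstream consumers rely on.
ALLOWED_CATEGORIES = {"Billing Issue", "Login Issue", "Shipping Delay"}


def validate_categories(rows: list[dict], allowed: set[str]) -> list[str]:
    """Return unexpected category values found in a batch, sorted."""
    seen = {row["category"] for row in rows}
    return sorted(seen - allowed)


def assert_contract(rows: list[dict], allowed: set[str]) -> None:
    """Fail the promotion step if the batch violates the contract."""
    unexpected = validate_categories(rows, allowed)
    if unexpected:
        raise ValueError(f"contract violation, unknown categories: {unexpected}")


rows = [{"category": "Billing"}, {"category": "Login Issue"}]
unexpected = validate_categories(rows, ALLOWED_CATEGORIES)
print(unexpected)
```

Calling `assert_contract` in the promotion workflow stops the renamed value from reaching the serving layer until the contract is updated on purpose.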

4. Serving and consumption surfaces

Serving is where data becomes decisions. AI can cause real damage if retrieval ignores identity or evidence.

AI-ready serving surfaces include:

- BI: governed self-serve access with consistent metrics

- APIs: operationalized data for apps and workflows

- Vector or embedding index: governed retrieval with identity-based filters and citation-friendly chunking

- Automation: audit-ready workflow triggers with traceable inputs and outputs

A practical example: An HR assistant should answer “What is our leave policy?” with citations to the current approved policy source. It should refuse if it cannot find current, approved sources.

A blueprint defines flow. The next layer makes that flow safe at enterprise scale.

The Trust Layer: Governed AI-Ready Data for Enterprise Scale

GenAI expands reach, and it expands risk. The Trust Layer turns AI-ready data into governed, enterprise-grade context by enforcing identity, permissions, freshness, and evidence end to end. In an enterprise AI-Ready Data Architecture, this layer keeps BI, APIs, and AI experiences consistent under pressure.

1. Identity and ACL propagation

If identity breaks, governance breaks.

Non-negotiables:

- Preserve auth context across storage, BI, APIs, indexing, and retrieval

- Enforce permissions at retrieval time, not only at storage time

Illustration: A user lacks access to a contract folder. The assistant summarizes those contracts anyway because indexing ignored ACLs. You prevent this by propagating permissions and enforcing them during retrieval.
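
Retrieval-time enforcement can be illustrated with a filter that runs after candidate chunks are fetched and before anything reaches the model. This is a sketch under assumptions: the `allowed_groups` field and the group names are hypothetical, and a production system would typically push the same filter into the index query as well.

```python
def filter_by_acl(candidates: list[dict], user_groups: set[str]) -> list[dict]:
    """Keep only chunks whose ACL intersects the user's groups.

    A chunk with a missing or empty ACL is dropped (deny by default).
    """
    allowed = []
    for chunk in candidates:
        acl = set(chunk.get("allowed_groups") or [])
        if acl & user_groups:
            allowed.append(chunk)
    return allowed


candidates = [
    {"id": "contracts/acme-msa", "allowed_groups": ["legal"]},
    {"id": "handbook/leave", "allowed_groups": ["all-employees"]},
    {"id": "scratch/untagged-note"},  # ACL was lost during ingestion
]
visible = filter_by_acl(candidates, {"all-employees", "sales"})
print([c["id"] for c in visible])
```

The untagged note is excluded along with the contract, so a permission that was lost during ingestion results in a refusal rather than a leak.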

2. Deterministic Enforcement and Audit Logging

Policies cannot be best effort. They must be deterministic and verifiable.

Your audit trail should capture:

- Who requested what

- Which policy applied

- What sources were used (evidence links)

- What was returned or denied

- When it happened

When leaders ask, “Why did the assistant say this?” you should show a chain of evidence, not a guess.
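
The audit fields listed above map naturally onto one structured log line per request. The following is a minimal sketch; the field names, the `audit_record` helper, and the sample identifiers are illustrative assumptions rather than a prescribed schema.

```python
import json
from datetime import datetime, timezone


def audit_record(user_id, query, policy_id, sources, decision, now=None):
    """Build one append-only audit entry as a JSON line."""
    if decision not in {"returned", "denied"}:
        raise ValueError("decision must be 'returned' or 'denied'")
    timestamp = (now or datetime.now(timezone.utc)).isoformat()
    entry = {
        "who": user_id,            # who requested
        "what": query,             # what was requested
        "policy": policy_id,       # which policy applied
        "sources": list(sources),  # evidence links used
        "decision": decision,      # what was returned or denied
        "when": timestamp,         # when it happened (UTC)
    }
    return json.dumps(entry, sort_keys=True)


line = audit_record(
    "u-17",
    "What is our leave policy?",
    "hr-policy-v2",
    ["hr/leave-policy#v3"],
    "returned",
    now=datetime(2025, 1, 15, 9, 30, tzinfo=timezone.utc),
)
print(line)
```

Writing entries as sorted JSON lines keeps them easy to diff, search, and ship to whatever log store you already use.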

3. Traceability and Reproducibility

To make AI behavior defensible, capture:

- Data product version

- Retrieval configuration

- Prompt and policy configuration

- Model and app versions

- Outputs with evidence links

Business outcome: repeatability, rollback, and defensible AI behavior.
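
One lightweight way to make a run reproducible is to store a manifest of these versions alongside each output and derive a short fingerprint from it. The sketch below assumes a JSON-serializable manifest; the keys and version strings are hypothetical examples.

```python
import hashlib
import json


def run_fingerprint(manifest: dict) -> str:
    """Short, order-independent hash of everything that shaped an answer."""
    canonical = json.dumps(manifest, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]


manifest = {
    "data_product_version": "revenue@2.1",
    "retrieval_config": {"top_k": 5, "hybrid": True},
    "prompt_version": "p-14",
    "model_version": "m-2025-01",
    "app_version": "1.8.0",
}
fingerprint = run_fingerprint(manifest)
print(fingerprint)
```

Two answers with the same fingerprint were produced under the same configuration, which narrows an investigation to the data or the request itself.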

4. Deny on ambiguity: the policy that prevents trust collapse

Adopt a simple principle: when required signals are missing or conflicting, deny.

Apply deny on ambiguity to:

- Unclear classification: treat as restricted

- Missing lineage or provenance: do not use as GenAI context

- Freshness SLO violation: refuse or route to a fallback

- Conflicting metric definitions: block until resolved

- Entitlement uncertainty: deny retrieval

Make denial usable:

- Explain the reason (for example, freshness SLO failed)

- Offer safe alternatives (last certified snapshot, request access, escalate to human)

- Log the event for review and remediation
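
Deny on ambiguity can be expressed as a single decision function that returns both the outcome and a reason the user can read. This is a sketch, assuming the signals below are available at request time; `ContextSignals` and its fields are hypothetical names for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ContextSignals:
    classification: Optional[str]  # None means the content is unclassified
    has_lineage: bool
    age_hours: float
    freshness_slo_hours: float
    user_entitled: Optional[bool]  # None means entitlement could not be verified


def decide(signals: ContextSignals) -> tuple[str, str]:
    """Return (decision, reason). Any missing or failing signal denies."""
    if signals.classification is None:
        return "deny", "unclassified content is treated as restricted"
    if not signals.has_lineage:
        return "deny", "missing lineage or provenance"
    if signals.age_hours > signals.freshness_slo_hours:
        return "deny", "freshness SLO failed"
    if signals.user_entitled is not True:
        return "deny", "entitlement could not be confirmed"
    return "allow", "all required signals present"


stale = ContextSignals("internal", True, age_hours=30, freshness_slo_hours=24, user_entitled=True)
decision, reason = decide(stale)
print(decision, "-", reason)
```

The reason string is what makes the denial usable: it can be shown to the user, attached to a ticket, and logged for remediation.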

With trust controls in place, you can run AI in production with fewer surprises.

Taking AI-Ready Data from Pilot to Production

Pilots succeed when usage is low and expectations are lenient. Production raises the stakes with more users, more edge cases, more audits, and more cost scrutiny. To move from pilot to production smoothly, treat reliability as an operating discipline instead of a one-time build.

Operating model: reliability is a team sport

Architecture does not fail on its own. Ownership fails. Change control fails. Runbooks fail.

Platform team vs domain teams

- Platform team owns infrastructure primitives, observability, policy tooling, and enablement

- Domain teams own data product definitions, quality ownership, access rules, and SLOs

Example: The platform team ships catalog and observability. The sales domain owns the Account definition and SLOs. Both roles matter.

Data product contracts make reliability enforceable

Each data product contract should specify:

- Owner and escalation path

- Versioning rules

- Access policy

- Quality SLOs

- Audit requirements

- Deprecation notice window for breaking changes

Adopt a baseline rule: set a deprecation window for any breaking change. Treat it like an API version bump, with clear versioning and advance notice. This prevents silent shifts that break retrieval and ripple into downstream decisions.

AI operations: keep production AI stable

Production AI is a living system. It changes as data changes. You need operational discipline to keep outcomes predictable.

Monitor what matters. Do not track only whether pipelines succeeded. Also track:

- Freshness and completeness

- Schema drift and distribution drift

- Retrieval behavior (hit rate, stale context, denied retrievals)

- Permission leakage signals

- Latency and error rates across request to retrieval to response
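
Retrieval behavior can be summarized from request logs with a few ratios. The sketch below assumes each logged event records an outcome and whether the context was stale; the event shape and the `retrieval_health` helper are illustrative assumptions.

```python
def retrieval_health(events: list[dict]) -> dict[str, float]:
    """Summarize retrieval behavior as rates over a window of logged events."""
    total = len(events)
    if total == 0:
        return {"hit_rate": 0.0, "stale_rate": 0.0, "denied_rate": 0.0}
    hits = sum(1 for e in events if e["outcome"] == "hit")
    denied = sum(1 for e in events if e["outcome"] == "denied")
    stale = sum(1 for e in events if e.get("stale", False))
    return {
        "hit_rate": hits / total,
        "stale_rate": stale / total,
        "denied_rate": denied / total,
    }


# Hypothetical window of four logged retrievals.
events = [
    {"outcome": "hit", "stale": False},
    {"outcome": "hit", "stale": True},
    {"outcome": "miss", "stale": False},
    {"outcome": "denied", "stale": False},
]
health = retrieval_health(events)
print(health)
```

Alerting on a rising stale or denied rate catches problems that a green pipeline status would hide.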

Logging and human-in-the-loop review for high-risk flows

Operational telemetry should capture the full chain:

- Request to retrieval to response to evidence

- Exceptions routed for review where needed

Example: an agent proposes a credit decision or refund exception. Route it to human review and attach retrieved evidence.

Safe failure and escalation

Design for safe degradation, not silent failure:

- Stale data: refuse or route to human

- Policy ambiguity: deny and create a ticket

- Latency spikes: throttle and degrade gracefully

- Cost spikes: rate limit or cap workloads

Add a lightweight incident RACI so response does not stall when trust is on the line.

Checklist: AI-ready data ecosystem readiness

Use this checklist during an AI readiness assessment to identify gaps before you scale.

1. Architecture

- Standard ingestion patterns (batch, CDC, streaming) with validation gates

- Unstructured pipelines with dedupe, versioning, lifecycle, and permission propagation

- Storage zones separated (raw, curated, served) with governed access

- Transform CI/CD with tests, promotion workflows, and rollback patterns

- Serving surfaces include governed BI, APIs, and identity-aware retrieval

2. Trust layer

- Catalog coverage for critical datasets and documents

- Lineage for priority pipelines and key data products

- Quality checks with owners and thresholds

- Deterministic access enforcement and audit logs

- Deny on ambiguity implemented for missing or uncertain signals

3. Operating model

- Platform vs domain responsibilities defined

- Data product contracts, SLOs, and deprecation windows

- Incident playbooks and escalation paths

4. AI operations

- End to end telemetry: request to retrieval to response to evidence

- Monitoring for drift, freshness, permission issues, and latency

- Human-in-the-loop review for high-risk exceptions

5. FinOps

- Budgets by domain and tiered service levels

- Caching, rate limits, and lifecycle policies

- Unit economics tracked per data product and per workload

Framework: implement in phases without stalling delivery

1. Diagnose: map highest value use cases to the data products and risk surfaces that support them

2. Stabilize: certify critical data products (definitions, tests, lineage, ownership)

3. Operationalize: DataOps CI/CD plus SLOs plus incident playbooks

4. Control runtime: identity-aware retrieval plus deny on ambiguity plus audit evidence

5. Scale safely: FinOps guardrails plus tiered service levels

Next you need to apply cost guardrails so scale stays sustainable.

FinOps Guardrails for AI-Ready Data Architecture

AI workloads create new cost curves. Indexing, retrieval, observability, retries, and serving can grow until finance asks hard questions. In an AI-Ready Data Architecture, FinOps keeps experimentation sustainable and production predictable.

1. Where costs accumulate

- Ingestion retries

- Storage growth

- Compute

- Governance tooling

- Observability

- AI serving surfaces

2. Practical controls that work

- Budget by domain and workload

- Tier serving (gold vs sandbox)

- Caching and rate limits

- Batch heavy enrichment jobs where possible

- Limit retrieval depth for low risk use cases

- Cache common answers with citations

3. Unit economics to track

- Cost per data product per month

- Cost per 1,000 queries or workflows

- One ROI metric per use case (time saved, tickets deflected, cycle time reduced, error rate reduced)
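
The unit economics above reduce to simple arithmetic once costs and volumes are tracked per workload. The figures in this sketch are made up for illustration, and `cost_per_thousand_queries` and `simple_roi` are hypothetical helper names.

```python
def cost_per_thousand_queries(monthly_cost: float, monthly_queries: int) -> float:
    """Cost per 1,000 queries for one workload over one month."""
    if monthly_queries <= 0:
        raise ValueError("monthly_queries must be positive")
    return monthly_cost * 1000 / monthly_queries


def simple_roi(value_delivered: float, monthly_cost: float) -> float:
    """Value delivered per unit of cost, using one chosen ROI metric."""
    if monthly_cost <= 0:
        raise ValueError("monthly_cost must be positive")
    return value_delivered / monthly_cost


# Hypothetical numbers for a support copilot.
monthly_cost = 1800.0        # indexing, retrieval, serving, observability
monthly_queries = 120_000
tickets_deflected = 900
cost_per_ticket = 6.0        # assumed handling cost of one ticket

unit_cost = cost_per_thousand_queries(monthly_cost, monthly_queries)
roi = simple_roi(tickets_deflected * cost_per_ticket, monthly_cost)
print(f"cost per 1,000 queries: {unit_cost:.2f}, ROI: {roi:.1f}x")
```

Tracking these two numbers per use case makes it clear which workloads earn their budget and which should stay in the sandbox tier.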

FinOps prevents waste. It also forces clarity on what you scale and why.

How Rishabh Software Helps You Achieve AI Readiness with AI-Ready Data Architecture

Most teams do not struggle to build a pipeline. They struggle to build AI-ready data that stays reliable after go-live, when adoption spikes, policies evolve, and GenAI starts driving real decisions.

Where we support teams:

- Building modern data platforms, scalable pipelines, lakehouse and warehouse patterns, semantic layer, and governed serving

- Identity-aware access, permission propagation into indexing and retrieval, audit logging, and policy enforcement patterns

- DataOps CI/CD, automated testing, observability, drift detection, incident response, and rollback readiness

- Data product contracts, SLOs, ownership models, and change and deprecation playbooks

Many GenAI use cases also depend on documents, tickets, PDFs, policies, and knowledge bases. If unstructured content is part of your roadmap, we can also help you Extract Optimal ROI from Unstructured Data across enterprise documents and content pipelines using AI.

If you want to move from demos to production, book a discovery call with us. We will run an AI assessment and evaluate your ecosystem against this AI-Ready Data Architecture blueprint, including the trust layer, operating model, AI operations reliability, and FinOps guardrails.

And if you need hands-on support to implement this on the Microsoft ecosystem, explore our Microsoft Data and AI Services. As a Microsoft Solutions Partner, we help you build a stronger data foundation and accelerate adoption using Microsoft Azure and Microsoft Fabric. We also enable GenAI safely with Azure OpenAI Service, Azure AI Search for retrieval-augmented generation, and Copilot-focused implementation and adoption support, with governance and security designed in.

FAQs

Q: Why does GenAI still give wrong answers when the warehouse looks fine?

A: Because “fine” is not a runtime guarantee. Missing semantics, stale context, or permission misalignment causes retrieval to feed the model the wrong evidence, or evidence the user should not see.

Q: What risk do teams overlook most often?

A: Permission leakage through indexing and retrieval when ACLs do not propagate and enforcement does not happen end to end.

Q: What does deny on ambiguity look like for business users?

A: A clear refusal with the reason, a safe fallback (last certified snapshot, approved source, or access request) and a logged trail for remediation.

Q: How do you prevent AI spend from escalating unpredictably?

A: Treat AI cost like total cost of ownership (TCO). Track the full cost across infrastructure, tooling, engineering effort, governance, and variable usage, including token and retrieval costs. Then apply guardrails where growth typically spikes: set budgets by domain and use case, tier workloads (sandbox vs production), and use caching and rate limits for high-volume paths. We can help you enforce lifecycle controls for data and indexes and monitor unit economics tied to one business outcome per use case, so you scale only what delivers value. For a fuller breakdown of a practical TCO approach and the cost drivers teams often miss, read this blog on GenAI-Aware Total Cost of Ownership for Digital Products.