Learn how Rishabh Software leveraged its Big Data expertise to develop a multi-node data architecture for a Europe-based out-of-home (OOH) and digital advertising company. The solution handles millions of booking requests per hour and improved booking data processing time by 20%, enhancing customer service and profitability.

Objective

Our Europe-based out-of-home and digital advertising customer wanted to improve its advertising booking process by reducing the booking time for its inventory located across Europe. Its proprietary booking application created millions of ad bookings every hour, but it suffered from high time lag, which increased the time needed to process each booking. The customer selected Rishabh Software as its preferred Big Data solution provider to cut booking data processing time by developing a multi-node data architecture capable of handling the millions of booking requests received each hour.

Challenges

- Lengthy ad booking process

- Processing data in real time

- Inaccurate booking reports

- Improving response time

Approach

Rishabh Software’s big data team analyzed the existing system architecture and built a proof of concept to address the booking data processing challenge. Following an Agile methodology, the team developed a multi-node big data architecture built on Resilient Distributed Datasets (RDDs) to manage millions of ad booking requests per hour.
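The core idea behind RDDs is that data is split into partitions spread across nodes, each partition is processed independently, and the partial results are merged. The sketch below is a pure-Python analogy of that pattern (similar in spirit to Spark's `reduceByKey`), not Spark's API or the project's actual code. The booking tuple shape, the function names and the use of CRC32 for partitioning are all hypothetical choices for illustration; Spark uses its own partitioner.

```python
import zlib
from collections import Counter
from typing import Dict, Iterable, List, Tuple

# Hypothetical booking request: (inventory_id, slot_count)
Booking = Tuple[str, int]


def partition(bookings: Iterable[Booking], num_partitions: int) -> List[List[Booking]]:
    """Assign each booking to a partition by hashing its inventory key.

    zlib.crc32 keeps the assignment deterministic across runs
    (Python's built-in hash() is randomized for strings), so all
    requests for the same inventory land in the same partition.
    """
    parts: List[List[Booking]] = [[] for _ in range(num_partitions)]
    for inventory_id, slots in bookings:
        idx = zlib.crc32(inventory_id.encode("utf-8")) % num_partitions
        parts[idx].append((inventory_id, slots))
    return parts


def reduce_by_key(parts: List[List[Booking]]) -> Dict[str, int]:
    """Sum booked slots per inventory: combine within each partition, then merge."""
    merged: Counter = Counter()
    for part in parts:
        local: Counter = Counter()  # per-partition (per-node) partial result
        for inventory_id, slots in part:
            local[inventory_id] += slots
        merged.update(local)  # Counter.update adds counts rather than replacing them
    return dict(merged)
```

For example, `reduce_by_key(partition([("screen-berlin-01", 2), ("billboard-paris-07", 1), ("screen-berlin-01", 3)], 4))` yields 5 slots for `screen-berlin-01` and 1 for `billboard-paris-07`. Because each key maps to exactly one partition, partitions can be processed on separate nodes without coordinating on shared keys.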

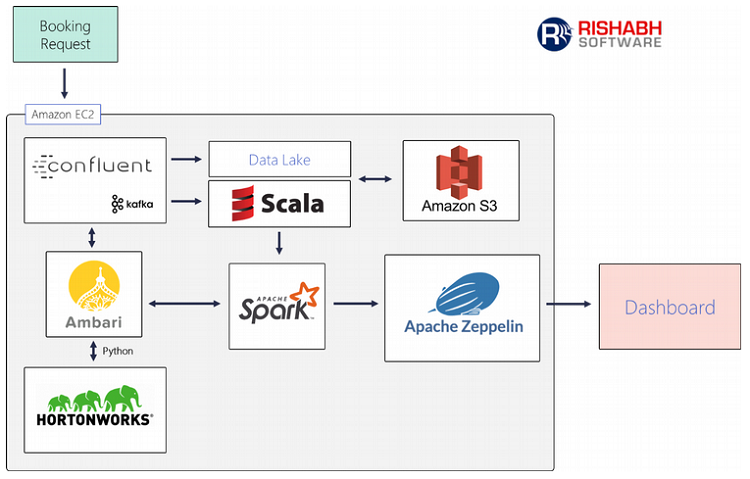

Confluent Kafka was used to ingest booking requests in real time and feed a data lake backed by Cassandra, which was further integrated with Scala-based processing on AWS to streamline data processing. Confluent Kafka handled both producer and consumer operations, using serialization and deserialization to convert booking messages to and from bytes. Apache Spark was also used to process real-time data requests. The overall infrastructure ran on Amazon EC2, using a master-slave architecture to manage the various instances, backed by AWS Kinesis and AWS S3.
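To illustrate the serialization and deserialization step, here is a minimal sketch of the logic a Kafka producer's value serializer and a consumer's value deserializer might wrap. It uses JSON (listed among the project's tools) for readability; a production Confluent setup would more likely use Avro schemas. The `BookingRequest` fields and the function names are hypothetical, since the real message schema is not published.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class BookingRequest:
    # Hypothetical fields for illustration only.
    booking_id: str
    inventory_id: str
    start_hour: int
    slots: int


def serialize(req: BookingRequest) -> bytes:
    """Producer side: turn a booking object into bytes for the Kafka topic."""
    return json.dumps(asdict(req), sort_keys=True).encode("utf-8")


def deserialize(payload: bytes) -> BookingRequest:
    """Consumer side: rebuild the booking object from the message bytes."""
    data = json.loads(payload.decode("utf-8"))
    return BookingRequest(**data)
```

A round trip such as `deserialize(serialize(req)) == req` should always hold; keeping producer and consumer in agreement on one schema is what lets independent services exchange booking messages safely.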

Lastly, the Hortonworks Data Platform (HDP) sandbox was used to monitor various services in real time, and Python was used to interact with the multi-node big data architecture through Apache Ambari Server.
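Ambari Server exposes a REST API, so Python scripts can query service status with nothing beyond the standard library. The sketch below only builds the request for listing service states in a cluster; it does not send it. The host, cluster name and credentials are hypothetical, port 8080 is assumed as Ambari's default, and the endpoint path and `fields` filter reflect the Ambari v1 REST API as commonly documented, so verify them against your Ambari version.

```python
import base64
import urllib.parse
import urllib.request


def build_service_state_request(host: str, cluster: str, user: str, password: str,
                                port: int = 8080) -> urllib.request.Request:
    """Build (but do not send) a GET request listing service states in a cluster."""
    path = f"/api/v1/clusters/{urllib.parse.quote(cluster)}/services"
    # Ask Ambari to return only each service's state; keep "/" unescaped for readability.
    query = urllib.parse.urlencode({"fields": "ServiceInfo/state"}, safe="/")
    url = f"http://{host}:{port}{path}?{query}"
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        headers={
            "Authorization": f"Basic {token}",  # HTTP basic authentication
            # Ambari requires this header on write requests; harmless on reads.
            "X-Requested-By": "ambari",
        },
        method="GET",
    )
```

Sending it with `urllib.request.urlopen(req)` and parsing the body with `json.load` would return the cluster's services and their states, which a monitoring script could poll periodically.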

A team of 10+ big data engineers was deployed for the project, accessing the customer’s infrastructure through an offshore development center. A dedicated QA team performed Big Data testing, including performance testing.

Learn more about how we helped a digital advertising enterprise make optimal use of its digital inventory with a booking solution tailored to its advertising requirements: Digital Ad Inventory Management Solution Built Using Kafka.

Business Benefits

- 20% improvement in booking data processing time

- Improved profitability

- Scalable solution architecture

- Accurate reporting

Industry Segment

Out-of-Home Advertising

Customer Profile

Europe-based digital & out-of-home advertising enterprise

Technology and Tools

- Confluent Kafka

- AWS Kinesis

- AWS S3

- AWS EC2

- Avro Schema

- Ambari Server

- Python

- Scala

- Apache Zeppelin

- JSON