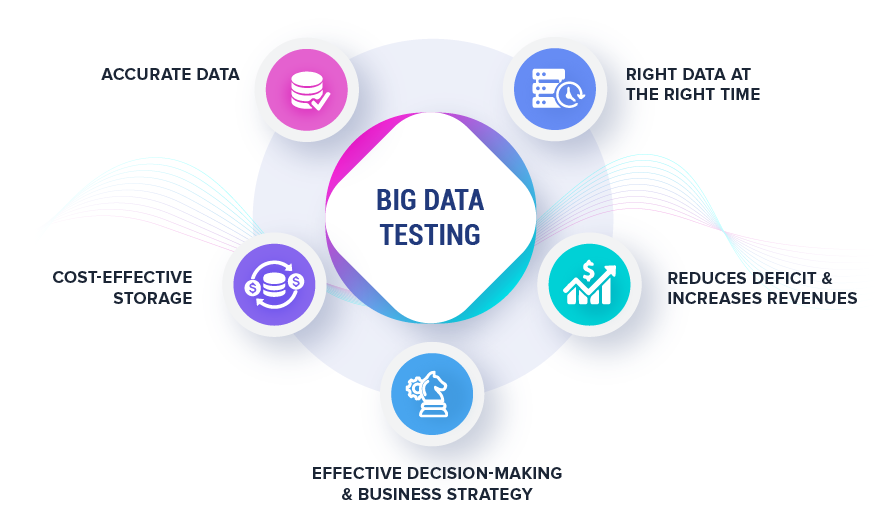

Enterprises today are constantly looking for ways to leverage the power of Big Data to gain a competitive advantage and make informed decisions. Big Data refers to huge sets of raw data that contain valuable information, and applications built on it need careful design and testing to produce the desired outcomes. With the rapid growth in the number of big data applications, testing them is crucial. Big data testing is the process of performing QA on such data and applications; it can cover database, infrastructure, performance, and functional testing.

Through this article, we’ll help you understand the types, tools, and terminologies associated with big data testing.

What is Big Data Testing?

It can be defined as the process of checking and validating the functionality of a big data application. Big Data is a collection of data so massive in terms of volume, variety, and velocity that traditional computing techniques cannot handle it on their own. Testing such datasets therefore calls for specialized testing techniques, frameworks, a clear strategy, and a wide range of tools. The goal of this type of testing is to ensure that the system runs smoothly and error-free while retaining efficiency, performance, and security.

Key Benefits of Big Data Testing

Big Data Testing Components

Test Data

Test data plays a primary role because expected results are derived from it and from the implemented logic. This logic must be verified against the business requirements and data before moving to production.

- Test Data Quality checks ensure the data is accurate enough for application processing.

- Test Data Generation uses tools to produce data sets against which the application logic can be exercised.

- Data Storage works as a distributed file system for hosting applications and storing data, similar to the production environment.
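As a rough illustration of the data quality point above, the following minimal sketch flags rows with missing values and duplicate keys in a small in-memory sample. The function name `check_quality`, the field names, and the sample records are all hypothetical; a real big data pipeline would run equivalent checks inside its own processing framework rather than on a Python list.

```python
from collections import Counter


def check_quality(records, required_fields, key_field):
    """Return a small report of basic data-quality problems in `records`."""
    # Indexes of rows where any required field is absent, None, or empty.
    rows_with_missing = [
        i for i, rec in enumerate(records)
        if any(rec.get(f) in (None, "") for f in required_fields)
    ]
    # Key values that occur more than once.
    key_counts = Counter(rec.get(key_field) for rec in records)
    duplicate_keys = sorted(
        k for k, n in key_counts.items() if k is not None and n > 1
    )
    return {
        "total": len(records),
        "rows_with_missing": rows_with_missing,
        "duplicate_keys": duplicate_keys,
    }


# Hypothetical sample data, invented for illustration only.
sample = [
    {"id": 1, "amount": 10.0, "country": "DE"},
    {"id": 2, "amount": None, "country": "FR"},
    {"id": 2, "amount": 5.5, "country": "FR"},
    {"id": 3, "amount": 7.25, "country": ""},
]

report = check_quality(sample, ["id", "amount", "country"], key_field="id")
# Rows 1 and 3 have a missing value; key 2 appears twice.
print(report)
```

A report like this can be turned into a pass/fail gate by asserting that both lists are empty before the data is handed to the application under test.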

Test Environment

A test environment provides accurate feedback about the quality and behavior of the application under test. Ideally a replica of the production environment, it is one of the most crucial elements for having confidence in the testing results.

For big data software testing, the test environment should have:

- Enough space for storage and processing of a large amount of data

- Cluster with distributed nodes and data

- Minimal CPU and memory utilization, so that performance stays high during big data performance tests

Performance Testing

Big data applications are meant to process different varieties and volumes of data, and they are expected to process the maximum amount of data in a short period. Performance parameters therefore play a crucial role and help define SLAs. Performance testing is not an easy task, so testers should have comprehensive insight into its essential considerations and how to go about it.
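One way to connect a performance parameter to an SLA is to measure throughput and compare it with an agreed minimum. The sketch below is only an illustration under simple assumptions: the helper names `measure_throughput` and `meets_sla`, the trivial workload, and the 1,000 records-per-second threshold are all invented for the example and do not come from any particular tool.

```python
import time


def measure_throughput(process, records):
    """Run `process` on every record and return records processed per second."""
    start = time.perf_counter()
    for rec in records:
        process(rec)
    elapsed = time.perf_counter() - start
    # Guard against a zero reading on very coarse timers.
    return len(records) / elapsed if elapsed > 0 else float("inf")


def meets_sla(throughput, min_records_per_second):
    """True if the measured throughput satisfies the agreed minimum."""
    return throughput >= min_records_per_second


# Hypothetical workload: doubling each number stands in for real processing.
records = list(range(100_000))
rate = measure_throughput(lambda r: r * 2, records)
print(f"{rate:,.0f} records/s, SLA met: {meets_sla(rate, 1_000)}")
```

The measured rate depends on the machine, so a real test would repeat the measurement several times and on production-like hardware before judging it against the SLA.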

Big Data Testing Challenges and Solutions

Here are some of the common challenges that big data testers might face while working with the vast data pool.

Data Growth Issues: The quantity of information stored in companies' large data centers and databases is increasing rapidly. QA professionals must audit this large volume of data periodically to verify its relevance and accuracy for the business, but testing it manually is no longer an option.

Solution: Automated test scripts play a vital role in big data testing strategy to detect any flaws in the process. Make sure to assign proficient software test engineers skilled in creating and executing automated tests for big data applications.

Real-time Scalability: A significant rise in workload volume can drastically impact database accessibility, networking, and processing capability.

Solution: Your data testing methodology should include the following testing approaches:

- Cluster Technique:

  - Distribute immense amounts of data evenly across all nodes of a cluster.

  - The large data sets are split into chunks and stored on different nodes of the cluster.

  - By replicating file chunks and storing them on different nodes, the application's dependency on any single node is reduced.

- Data Partitioning:

  - This approach is less complex and simpler to execute. Testers can achieve parallelism at the CPU level through data partitioning.
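The chunking and replication idea described above can be sketched in a few lines. This is a simplified illustration, not how any specific distributed file system places data: the round-robin placement rule, the function names, and the node names are assumptions made for the example.

```python
def split_into_chunks(records, chunk_size):
    """Split a list of records into consecutive chunks of at most `chunk_size`."""
    return [records[i:i + chunk_size] for i in range(0, len(records), chunk_size)]


def place_chunks(num_chunks, nodes, replication=2):
    """Assign each chunk to `replication` distinct nodes, round-robin."""
    if replication > len(nodes):
        raise ValueError("replication factor cannot exceed the number of nodes")
    return {
        chunk_id: [nodes[(chunk_id + r) % len(nodes)] for r in range(replication)]
        for chunk_id in range(num_chunks)
    }


def survives_failure(placement, failed_node):
    """True if every chunk still has at least one replica on a healthy node."""
    return all(
        any(node != failed_node for node in replicas)
        for replicas in placement.values()
    )


# Hypothetical cluster with three nodes and ten records.
nodes = ["node-a", "node-b", "node-c"]
chunks = split_into_chunks(list(range(10)), chunk_size=3)
placement = place_chunks(len(chunks), nodes, replication=2)

for chunk_id, replicas in placement.items():
    print(chunk_id, chunks[chunk_id], replicas)
```

With two replicas per chunk, `survives_failure(placement, "node-a")` returns `True`: losing any single node still leaves one copy of every chunk, which is the reduced dependency the list above refers to.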

Stretched Deadlines & Costs: If the testing procedure is not properly standardized for (1) optimization and (2) reuse of test case sets, test cycle execution may be delayed beyond the intended time frame. This may lead to increased overall costs, maintenance issues, and delivery slippages.

Solution: Big Data testers should ensure that the test cycles are accelerated. This is possible by adopting suitable infrastructure and using appropriate validation tools and data processing techniques.

Types of Big Data Testing

- Architecture Testing: This type of testing ensures that data is processed properly and meets the business requirements. If the architecture is improper, performance may degrade, which can interrupt data processing and cause data loss. Hence, architecture testing is vital to the success of your Big Data project.

- Database Testing: As the name suggests, this testing typically involves validating data gathered from various databases. It verifies that the data extracted from cloud sources or local databases is correct and complete.

- Performance Testing: This checks the loading and processing speed to ensure the stable performance of big data applications. It helps check the velocity of data coming from various databases and data warehouses, measured as output in IOPS (input/output operations per second). Further, it validates the core big data application functionality under load by running different test scenarios.

- Functional Testing: Big data applications with operational and analytical parts require thorough functional testing at the API level. This includes tests for all the sub-components, scripts, programs, and tools used for storing, loading, and processing data.
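For database testing in particular, a common pattern is to reconcile the extracted (target) data against its source by key and by a per-row fingerprint. The sketch below assumes both sides fit in memory as lists of dictionaries; the function names, column names, and sample rows are hypothetical and only illustrate the comparison.

```python
import hashlib


def row_fingerprint(row, columns):
    """Hash the selected column values of a row into a stable fingerprint."""
    joined = "|".join(str(row[c]) for c in columns)
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()


def reconcile(source_rows, target_rows, key, columns):
    """Compare source and target rows by key and by column fingerprint."""
    src = {r[key]: row_fingerprint(r, columns) for r in source_rows}
    tgt = {r[key]: row_fingerprint(r, columns) for r in target_rows}
    return {
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "unexpected_in_target": sorted(tgt.keys() - src.keys()),
        "mismatched": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }


# Hypothetical source and target extracts, invented for illustration.
source = [
    {"id": 1, "amount": 100},
    {"id": 2, "amount": 250},
    {"id": 3, "amount": 75},
]
target = [
    {"id": 1, "amount": 100},
    {"id": 2, "amount": 205},  # value changed during extraction
    {"id": 4, "amount": 60},   # row that does not exist in the source
]

result = reconcile(source, target, key="id", columns=["id", "amount"])
# Row 3 is missing, row 4 is unexpected, and row 2 differs.
print(result)
```

At real data volumes the same comparison would normally be pushed down to the data store or processing engine, for example by comparing row counts and aggregated checksums per partition instead of loading every row.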

Big Data Testing Tools

A QA team can get the most out of big data validation only when strong tools are in place.

Hadoop Distributed File System (HDFS): Part of Apache Hadoop, HDFS is a distributed file system that manages large data sets.

- Designed to scale up from one server to thousands, each offering local computation and storage.

- Is one of the major components of Apache Hadoop, the others being MapReduce and YARN.

HPCC: A High-Performance Computing Cluster is a collection of servers (computers) referred to as nodes. HPCC is an open-source, data-intensive computing platform.

- The architecture provides high performance in testing by supporting system, data, and pipeline parallelism.

Cloudera: Cloudera's distribution, popularly known as CDH (Cloudera's Distribution Including Apache Hadoop), is built specifically to integrate Hadoop with more than a dozen other critical open-source projects.

- Meets enterprise-level deployment demands

- Offers a free platform distribution that includes Apache Hadoop, Apache Impala, and Apache Spark

- Provides enhanced security and governance

- Enables organizations to collect, manage, control, and distribute an enormous amount of data.

Cassandra: A free, open-source, distributed NoSQL database designed to handle large amounts of data across many commodity servers.

- Provides high scalability and availability with no single point of failure.

Big Data Testing Use Cases

Use Case 1

Big Data Testing Reduced Processing Time by 20% for the Multi-Node Data Architecture solution

Business Need: A Europe-based out-of-home & digital advertising customer wanted to improve its advertising booking process by minimizing the booking time across its inventory.

Solution: Our proficient big data team analyzed the legacy system architecture to develop the multi-node Big Data architecture solution. The solution used RDDs (Resilient Distributed Datasets) to seamlessly manage millions of ad booking requests per hour. Our dedicated big data QA engineers conducted thorough performance testing to improve the booking data processing time and handle the millions of booking requests received.

Results achieved:

- 20% improvement in data processing time

- Improved profitability by 40%

- Scalable solution architecture

- 100% accurate reporting

Use Case 2

Big Data Testing Boosts Accuracy of Global Sales Calculation & Eases Talend Data Migration

Business Need: The primary objective of the customer was to get precise sales reporting & revenue generation as per their defined sales KPIs. This generated information was also used to calculate sales commission & compensation for the financial year.

Solution: Rishabh Software’s big data team architected the Talend data integration solution by integrating Talend with the existing systems. The approach was divided into several blocks to simplify functionality and combine the various outputs of the blocks. A team of 15+ big data engineers & QA testers supported the customer in validating & checking every aspect of sales commission & compensation to create accurate modules for generating sales reports & help revenue generation as per the sales KPIs.

Results achieved:

- Simplified process for revenue calculation

- Improved performance and accurate revenue and sales figures

- Less manual intervention

Concluding Remarks

Performing comprehensive testing on big data requires extensive, proficient knowledge to achieve robust results within the defined timeline & budget. With a dedicated team of QA experts, you can benefit from best practices for testing big data applications.

As a mature software testing company, we provide an end-to-end methodology that addresses all Big Data testing requirements. With the right skill set and unmatched techniques, we cater to the latest needs and offer big data development services for new-age applications. With predefined test data sets, test cases, and automation frameworks, our team ensures rapid QA process deployment, which in turn reduces time to market.