In today’s data-powered business landscape, efficient data pipelines form the backbone of successful operations and processes. These pipelines ensure a seamless flow of high-quality data that enables businesses to make data-driven decisions, train machine learning and deep learning models, generate multi-purpose reports, and derive valuable insights. However, the growing complexity of managing large volumes of data, combined with the variety and velocity of data sources, often makes configuring, deploying, and maintaining these pipelines challenging.

Following best practices is essential because data pipelines handle both batch and streaming data and route it to various destinations, such as machine learning models. In production, pipelines must manage performance, fault detection, and data integrity as they scale, preventing issues such as data corruption and duplication. From creation to management and maintenance, the entire process requires significant time, effort, and expertise, along with a deep understanding of data pipeline best practices.

While our previous blog post explored how to build a data pipeline, here we focus on best practices for managing and maintaining data pipelines effectively to optimize performance and ensure reliability.

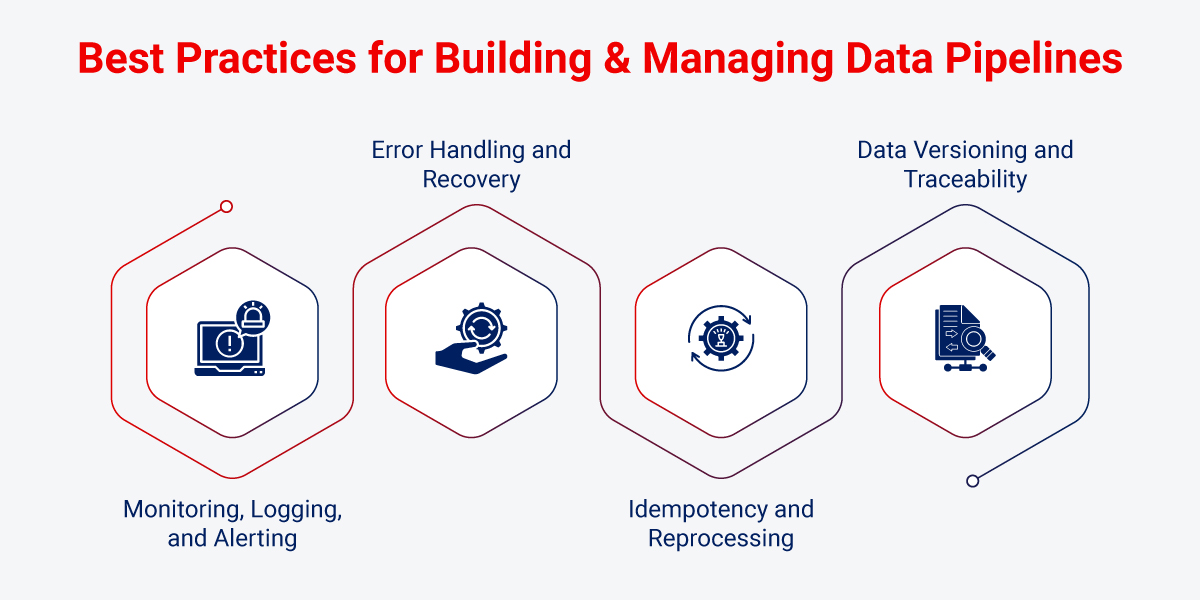

Data Pipeline Best Practices: Operational Aspects

Building a data pipeline with best practices in mind helps businesses seamlessly process structured and unstructured data, support different processing approaches, eliminate performance bottlenecks, speed up processing, and improve scalability. The following best practices apply to building a data pipeline and also help you maintain it over the long run.

Monitoring, Logging, and Alerting

A robust monitoring solution is essential for maintaining the efficiency and reliability of data pipelines. It begins with component-level monitoring using tools such as Prometheus, Grafana, or CloudWatch, which provide visibility into different aspects of the system. Centralized logging and alerting streamline failure notifications and make debugging faster and more efficient. Instrumentation in the pipeline generates logs and metrics, while collection and storage systems gather them from multiple sources, for example through API integrations or by trawling log files.
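
As an illustration of the instrumentation side, here is a minimal sketch of structured logging using only Python's standard `logging` module. Each record is emitted as a single JSON line, a format that centralized log stacks can index and search by field. The logger name `orders_pipeline` and the fields `stage`, `rows`, and `duration_ms` are hypothetical.

```python
import json
import logging
import sys
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON line that a log collector can parse."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "ts": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Merge structured fields passed as logger.info(..., extra={"context": {...}}).
        payload.update(getattr(record, "context", {}))
        return json.dumps(payload)


def get_pipeline_logger(name: str, stream=sys.stdout) -> logging.Logger:
    """Return a logger that writes JSON lines to `stream` (stdout by default)."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    if not logger.handlers:  # avoid attaching duplicate handlers on repeated calls
        handler = logging.StreamHandler(stream)
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
    logger.propagate = False  # don't also emit through the root logger
    return logger


# Hypothetical usage inside a load step.
log = get_pipeline_logger("orders_pipeline")
log.info("batch loaded", extra={"context": {"stage": "load", "rows": 1200, "duration_ms": 842}})
```

Because every line carries the same keys, a collector can filter by `stage` or chart `duration_ms` without fragile text parsing.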

Automated alerting can trigger actions, such as auto-scaling, in response to issues like data quality problems or pipeline failures. Tracking key performance metrics, including error rates, processing times, and resource utilization, helps confirm that the system is running smoothly. Also, use data visualization tools to build a 360-degree dashboard that offers a comprehensive view of all critical pipeline data. Furthermore, scalable, ML-based monitoring tools from data observability platforms can provide deeper insights as the pipeline grows.
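
The alerting logic behind such metrics can be sketched as a set of threshold rules evaluated against the latest values. In production this job is usually handled by Prometheus alerting rules or CloudWatch alarms; the metric names and thresholds below are hypothetical placeholders, not recommended values.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class AlertRule:
    metric: str       # name of the tracked metric
    threshold: float  # fire when the observed value exceeds this
    severity: str     # "critical" or "warning"


# Hypothetical thresholds; tune them against your own pipeline's baseline.
DEFAULT_RULES = [
    AlertRule("error_rate", 0.05, "critical"),
    AlertRule("p95_processing_s", 300.0, "warning"),
    AlertRule("cpu_utilization", 0.90, "warning"),
]


def error_rate(failed: int, total: int) -> float:
    return failed / total if total else 0.0


def evaluate_alerts(metrics: Dict[str, float], rules: List[AlertRule] = DEFAULT_RULES) -> List[str]:
    """Return one alert message per rule whose metric is above its threshold."""
    alerts = []
    for rule in rules:
        value = metrics.get(rule.metric)
        if value is not None and value > rule.threshold:
            alerts.append(f"[{rule.severity.upper()}] {rule.metric}={value} > {rule.threshold}")
    return alerts


current = {
    "error_rate": error_rate(failed=12, total=100),
    "p95_processing_s": 120.0,
    "cpu_utilization": 0.95,
}
for message in evaluate_alerts(current):
    print(message)  # a real system would page on-call or trigger auto-scaling here
```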

Error Handling and Recovery

To ensure that data pipelines operate reliably, it is crucial to address errors and prevent breakages that can significantly impact the entire ETL or ELT process.

Operational delays and data loss are among the most common issues in data pipelines. It is therefore essential to detect, process, and handle errors efficiently during pipeline execution. This proactive approach is key to building and maintaining a robust data pipeline.

As data pipelines grow, they face more potential points of failure and breakage. It is therefore important to design them to handle unexpected situations effectively, such as those listed below:

Technical Issues

- Hardware Failures: Issues with physical components can disrupt processing.

- Software Glitches: Bugs or errors in the code can lead to unexpected behavior.

- Infrastructure Issues: Network failures or outages can hinder data flow.

Data-Related Issues

- Missing Data: Gaps in data can affect overall accuracy.

- Format Errors: Incorrectly formatted data can lead to processing failures.

To effectively manage these challenges, implement detailed, structured logging and centralized monitoring using tools like ELK Stack, Prometheus, or CloudWatch. This approach provides comprehensive visibility into your data pipeline’s operations.

This enables real-time alerting and error diagnosis. Additionally, integrating failover mechanisms, supporting partial processing, and categorizing errors as critical or non-critical allow for controlled degradation and efficient recovery.
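
A minimal sketch of these ideas, assuming transient infrastructure problems surface as `ConnectionError` or `TimeoutError`: retries with exponential backoff handle short outages, errors are split into critical and non-critical classes, and partial processing diverts bad records to a dead-letter list instead of failing the whole batch. The exception classes and the `parse_amount` transform are hypothetical.

```python
import time
from typing import Callable, Iterable, List, Tuple, TypeVar

T = TypeVar("T")


class CriticalError(Exception):
    """Failure that should stop the run and alert someone (e.g. destination unreachable)."""


class RecordError(Exception):
    """Non-critical failure confined to one record (e.g. missing data or a format error)."""


def with_retries(fn: Callable[[], T], attempts: int = 3, base_delay: float = 0.5) -> T:
    """Call fn, retrying transient infrastructure errors with exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be >= 1")
    for attempt in range(1, attempts + 1):
        try:
            return fn()
        except (ConnectionError, TimeoutError) as exc:
            if attempt == attempts:
                raise CriticalError(f"gave up after {attempts} attempts") from exc
            time.sleep(base_delay * 2 ** (attempt - 1))  # 0.5s, 1s, 2s, ...


def process_batch(records: Iterable[dict],
                  transform: Callable[[dict], dict]) -> Tuple[List[dict], List[Tuple[dict, str]]]:
    """Partial processing: keep good records, divert bad ones to a dead-letter list."""
    good, dead_letter = [], []
    for record in records:
        try:
            good.append(transform(record))
        except RecordError as exc:
            dead_letter.append((record, str(exc)))
    return good, dead_letter


def parse_amount(record: dict) -> dict:
    """Hypothetical transform that rejects records with a missing or malformed amount."""
    try:
        return {**record, "amount": float(record["amount"])}
    except (KeyError, ValueError) as exc:
        raise RecordError(f"bad amount in record {record.get('id')}") from exc


good, dead = process_batch([{"id": 1, "amount": "9.5"}, {"id": 2, "amount": "n/a"}, {"id": 3}], parse_amount)
print(len(good), len(dead))  # 1 2
```

Dead-letter records can be inspected and replayed later, so one malformed row degrades the run gracefully rather than halting it.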

Idempotency and Reprocessing

When building a data pipeline, designing for idempotency is a crucial best practice. An idempotent pipeline can safely repeat an operation without changing the final result. This makes the pipeline more reliable and resilient, especially when dealing with failures or retries. For instance, if a network failure triggers multiple processing attempts for the same transaction, idempotency ensures that the transaction's effect is applied only once, maintaining data integrity and preventing inconsistencies.
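
One common way to make a load step idempotent is an upsert keyed on a unique identifier from the source. The sketch below uses Python's built-in `sqlite3` module so it runs anywhere; the `transactions` table and `txn_id` key are hypothetical. The `ON CONFLICT ... DO UPDATE` syntax requires SQLite 3.24 or later, and PostgreSQL supports the same form.

```python
import sqlite3
from typing import Iterable, Tuple


def create_table(conn: sqlite3.Connection) -> None:
    conn.execute(
        "CREATE TABLE IF NOT EXISTS transactions ("
        "  txn_id TEXT PRIMARY KEY,"  # natural key assigned by the source system
        "  amount REAL NOT NULL)"
    )


def load_transactions(conn: sqlite3.Connection, rows: Iterable[Tuple[str, float]]) -> None:
    """Upsert keyed on txn_id, so replaying the same batch leaves the table unchanged."""
    with conn:  # commit on success, roll back if any row fails
        conn.executemany(
            "INSERT INTO transactions (txn_id, amount) VALUES (?, ?) "
            "ON CONFLICT(txn_id) DO UPDATE SET amount = excluded.amount",
            rows,
        )


conn = sqlite3.connect(":memory:")
create_table(conn)
batch = [("t-1", 100.0), ("t-2", 250.0)]
load_transactions(conn, batch)
load_transactions(conn, batch)  # e.g. a retry after a network timeout
print(conn.execute("SELECT COUNT(*), SUM(amount) FROM transactions").fetchone())  # (2, 350.0)
```

Because the key, not the number of attempts, determines which row is written, the retry leaves the table in the same state as a single successful run.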

Key Features of an Idempotent Pipeline

- Data Source Integration: The pipeline pulls data from one or more sources, ensuring that repeated operations yield consistent results.

- Transformation: It performs necessary transformations on the data while maintaining data integrity and reliability during failures or retries.

- Loading: The data is loaded into a data warehouse consistently, so repeated loads leave the warehouse dataset in the same state.

Reasons for Reprocessing

- Error Correction: Rectifying errors or inconsistencies in processed data ensures accuracy.

- Data Updates: Incorporating changes or updates to existing data keeps the dataset relevant.

- Data Backfills: Processing historical data that was previously excluded ensures completeness.

- Incremental Updates: Handling new or updated data from a source system allows the pipeline to remain current.

By implementing idempotency in your data pipeline design, you can significantly improve its robustness and reliability. This approach enables the pipeline to handle failures and ensure accurate data processing.
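
Reprocessing and backfills benefit from the same principle. A widely used pattern is to overwrite a whole partition (here, one day of data) inside a single transaction, so rerunning a backfill for the same range never duplicates rows. This is a sketch under assumptions: the `events` table, its columns, and `fake_extract` are hypothetical stand-ins for your own warehouse table and source query.

```python
import sqlite3
from datetime import date, timedelta
from typing import Callable, Iterable, List, Tuple

Row = Tuple[str, str, float]  # (event_date as ISO string, event_id, value)


def reprocess_partition(conn: sqlite3.Connection, day: date, rows: Iterable[Row]) -> None:
    """Replace one daily partition in a single transaction, so reruns never duplicate rows."""
    with conn:
        conn.execute("DELETE FROM events WHERE event_date = ?", (day.isoformat(),))
        conn.executemany("INSERT INTO events (event_date, event_id, value) VALUES (?, ?, ?)", rows)


def backfill(conn: sqlite3.Connection, start: date, end: date,
             extract: Callable[[date], List[Row]]) -> int:
    """Reprocess every day in [start, end], e.g. after a bug fix or to add history."""
    day, count = start, 0
    while day <= end:
        reprocess_partition(conn, day, extract(day))
        day += timedelta(days=1)
        count += 1
    return count


def fake_extract(day: date) -> List[Row]:
    """Hypothetical stand-in for re-reading one day of source data."""
    return [(day.isoformat(), f"{day.isoformat()}-{i}", float(i)) for i in range(2)]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (event_date TEXT, event_id TEXT, value REAL)")
backfill(conn, date(2024, 1, 1), date(2024, 1, 3), fake_extract)
backfill(conn, date(2024, 1, 1), date(2024, 1, 3), fake_extract)  # rerun is safe
print(conn.execute("SELECT COUNT(*) FROM events").fetchone())  # (6,)
```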

Data Versioning and Traceability

Data versioning and traceability are key components of a well-structured data pipeline. Data versioning maintains multiple versions of data over time, enabling quick recovery in cases of data corruption or accidental deletion.

This approach not only supports business continuity but also aids regulatory compliance by tracking data changes. Data versioning also plays a key role in effective testing: running tests against specific, known versions of a dataset makes results reproducible and comparable across versions, which keeps test results reliable.
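
To make the versioning idea concrete, here is a minimal in-memory sketch that stores immutable snapshots addressed by a hash of their content. It is illustrative only: dedicated data versioning tools persist snapshots durably and scale to large datasets, and the class name `DatasetVersionStore` is hypothetical.

```python
import hashlib
import json
from typing import Dict, List


class DatasetVersionStore:
    """Keep immutable snapshots of a dataset, addressed by a hash of their content."""

    def __init__(self) -> None:
        self._snapshots: Dict[str, str] = {}  # version id -> serialized rows
        self.history: List[str] = []          # version ids in the order first committed

    def commit(self, rows: List[dict]) -> str:
        # Canonical JSON (sorted keys) so identical data always hashes to the same id.
        blob = json.dumps(rows, sort_keys=True)
        version = hashlib.sha256(blob.encode("utf-8")).hexdigest()[:12]
        if version not in self._snapshots:  # identical content reuses the existing version
            self._snapshots[version] = blob
            self.history.append(version)
        return version

    def checkout(self, version: str) -> List[dict]:
        """Return a fresh copy of a past version, e.g. to roll back or to pin a test run."""
        return json.loads(self._snapshots[version])


store = DatasetVersionStore()
v1 = store.commit([{"sku": "A1", "qty": 5}])
v2 = store.commit([{"sku": "A1", "qty": -999}])  # a bad load corrupts the data
restored = store.checkout(v1)                    # recover the last good version
print(restored == [{"sku": "A1", "qty": 5}])     # True
```

Pinning a test suite to a version id such as `v1` means the same inputs are used on every run, regardless of later loads.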

At the same time, businesses can use data traceability to preserve the integrity of data throughout its entire lifecycle. Data traceability serves multiple purposes, from ensuring data quality by pinpointing the root cause when an issue arises to helping businesses meet data privacy and governance requirements by keeping the data lifecycle transparent.

To achieve this, adopt practices such as data lineage to track how data flows from its various sources, metadata management to capture context about data sources and transformations, and logging and auditing to trace data-related activities, including user actions and system events.
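
A minimal sketch of lineage capture, assuming each pipeline step reports which datasets it read and wrote: the append-only event list doubles as an audit trail, and walking the graph upstream from a faulty dataset narrows down where an issue originated. All class, dataset, and actor names are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List


@dataclass
class LineageEvent:
    step: str          # transformation that ran, e.g. "join_orders"
    inputs: List[str]  # dataset ids it read
    output: str        # dataset id it wrote
    actor: str         # user or service account, kept for auditing
    at: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())


class LineageLog:
    def __init__(self) -> None:
        self.events: List[LineageEvent] = []           # append-only audit trail
        self._producer: Dict[str, LineageEvent] = {}  # dataset id -> step that produced it

    def record(self, event: LineageEvent) -> None:
        self.events.append(event)
        self._producer[event.output] = event

    def upstream(self, dataset: str) -> List[str]:
        """Every dataset `dataset` was derived from, used to trace an issue to its root cause."""
        found: List[str] = []
        stack = [dataset]
        while stack:
            event = self._producer.get(stack.pop())
            if event is None:
                continue  # an external source: nothing inside the pipeline produced it
            for parent in event.inputs:
                if parent not in found:
                    found.append(parent)
                    stack.append(parent)
        return found


lineage = LineageLog()
lineage.record(LineageEvent("ingest", ["crm_api"], "raw_customers", "svc-ingest"))
lineage.record(LineageEvent("ingest", ["orders_db"], "raw_orders", "svc-ingest"))
lineage.record(LineageEvent("join_orders", ["raw_customers", "raw_orders"], "customer_orders", "alice"))
print(sorted(lineage.upstream("customer_orders")))
# ['crm_api', 'orders_db', 'raw_customers', 'raw_orders']
```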

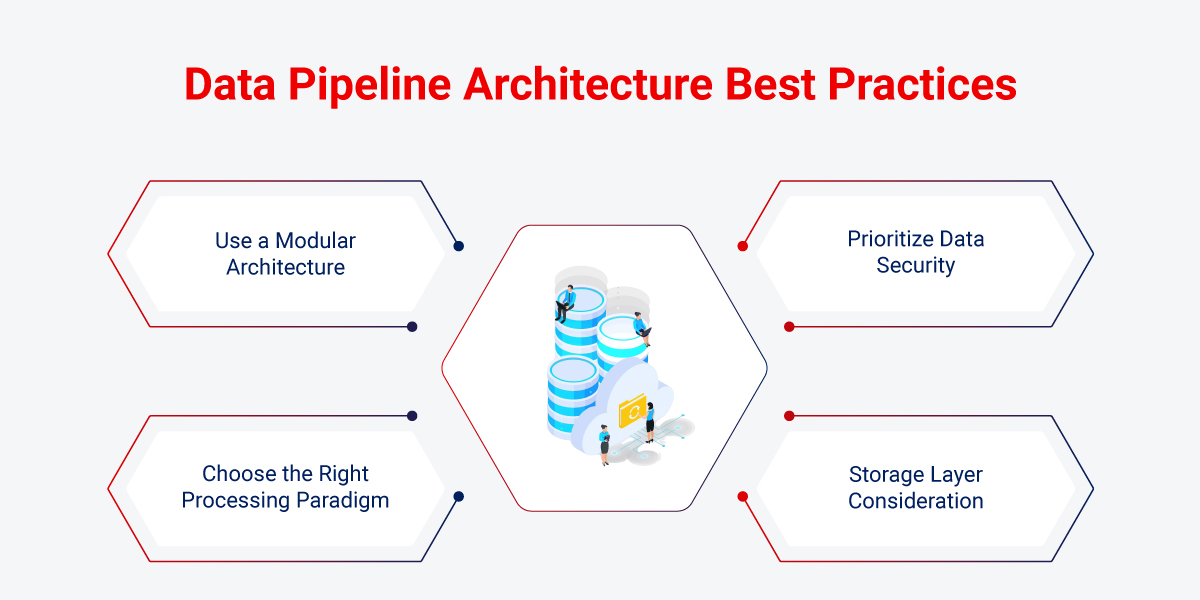

Data Pipeline Architecture Best Practices

Effective data pipeline architecture addresses the five key challenges of big data: volume, velocity, variety, veracity, and value (the 5 V’s). By implementing the right tools, technologies, and platforms, businesses can ensure efficient and uninterrupted data flow. Here are the best practices for data pipeline architecture:

Use a Modular Architecture

A modular architecture breaks the pipeline into smaller, independent components, or even smaller pipelines, each of which can perform at its best on its own. When these components are built as microservices, each one is responsible for a specific task, which improves scalability, maintainability, and performance.
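
The modular idea can be sketched in-process: each stage has one responsibility and a common interface (records in, records out), so stages can be tested, replaced, or reordered independently. In a microservice deployment each stage would run as its own service; here they are plain Python generator functions, and the stage names are hypothetical.

```python
from typing import Callable, Iterable, Iterator, List

# A stage takes records in and yields records out; this shared interface is what
# lets each stage be developed, tested, and replaced independently.
Stage = Callable[[Iterable[dict]], Iterator[dict]]


def drop_nulls(records: Iterable[dict]) -> Iterator[dict]:
    """Stage 1: discard records with any missing value."""
    for record in records:
        if all(value is not None for value in record.values()):
            yield record


def normalize_country(records: Iterable[dict]) -> Iterator[dict]:
    """Stage 2: standardize country codes."""
    for record in records:
        yield {**record, "country": record["country"].strip().upper()}


def run_pipeline(records: Iterable[dict], stages: List[Stage]) -> List[dict]:
    """Chain the stages lazily; adding or swapping one does not touch the others."""
    stream: Iterable[dict] = records
    for stage in stages:
        stream = stage(stream)
    return list(stream)


result = run_pipeline(
    [{"id": 1, "country": " us "}, {"id": 2, "country": None}],
    [drop_nulls, normalize_country],
)
print(result)  # [{'id': 1, 'country': 'US'}]
```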

Your organization can rely on containerization technologies such as Docker, which package each component with its dependencies so it runs consistently across environments and pipeline stages. Orchestration tools such as Kubernetes or Apache Mesos can efficiently manage and operate microservices across clusters, making better use of available resources and providing flexibility for scaling operations.

Choose the Right Processing Paradigm

If your focus is on optimizing data pipeline performance, choosing a processing paradigm can be challenging, since multiple paradigms are available, including:

- Batch processing

- Stream processing

- Micro-batch processing

- Event-driven processing

- Hybrid processing

Batch processing suits large datasets that do not require real-time updates, and tools such as Apache Spark or Hadoop handle it efficiently. Stream processing suits use cases that require real-time analysis. Businesses dealing with diverse data types and varying processing requirements can adopt a hybrid approach, which combines batch and stream processing to tailor data handling to specific business needs. Overall, choosing the right paradigm helps improve data pipeline performance, address scalability challenges, and support better decision-making.
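
The difference between batch and micro-batch processing can be sketched with the same aggregation. Batch processing waits for the full dataset and computes the result once, while micro-batch processing groups an incoming stream into small chunks and emits an updated result after each one. This is a toy illustration, not how Spark or any streaming engine is implemented.

```python
from itertools import islice
from typing import Iterable, Iterator, List


def batch_total(records: List[float]) -> float:
    """Batch: the whole dataset is available up front and processed in one pass."""
    return sum(records)


def micro_batches(stream: Iterable[float], size: int) -> Iterator[List[float]]:
    """Group a (possibly unbounded) stream into consecutive chunks of `size` items."""
    iterator = iter(stream)
    while chunk := list(islice(iterator, size)):
        yield chunk


def running_totals(stream: Iterable[float], size: int) -> Iterator[float]:
    """Micro-batch: emit an updated result after each chunk instead of waiting for all data."""
    total = 0.0
    for chunk in micro_batches(stream, size):
        total += sum(chunk)
        yield total


data = [1.0, 2.0, 3.0, 4.0, 5.0]
print(batch_total(data))                   # 15.0
print(list(running_totals(data, size=2)))  # [3.0, 10.0, 15.0]
```

Both approaches reach the same final answer; micro-batching trades some per-chunk overhead for results that are available sooner.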

Prioritize Data Security

One of the best practices for building a data pipeline is to maintain security at every stage and in every design discussion and decision. Encrypting data both at rest and in transit adds a layer of protection around sensitive data. Role-based access control (RBAC) can be integrated so that the system grants permissions based on user roles. Data masking can be used to obfuscate sensitive information. Moreover, regular security audits allow businesses to verify compliance with industry regulations.
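
Two of these controls, data masking and role-based access control, can be sketched in a few lines. The role-to-column mapping and the key are hypothetical; in practice the key would come from a secrets manager, and encryption at rest and in transit is usually configured in the storage layer and transport (for example, TLS) rather than in pipeline code.

```python
import hashlib
import hmac
from typing import Dict, Set

# Hypothetical role -> readable columns; in practice this comes from your IAM or data catalog.
ROLE_COLUMNS: Dict[str, Set[str]] = {
    "analyst": {"order_id", "amount", "country"},
    "support": {"order_id", "email", "country"},
}


def mask_email(email: str) -> str:
    """Static masking: keep the first character and the domain, hide the rest."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"


def pseudonymize(value: str, key: bytes) -> str:
    """Keyed hash (HMAC-SHA256): same input and key give the same token, so joins still work."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def view_for_role(record: dict, role: str) -> dict:
    """RBAC: return only the columns the role may read; unknown roles get nothing."""
    allowed = ROLE_COLUMNS.get(role, set())
    return {k: v for k, v in record.items() if k in allowed}


order = {"order_id": 7, "email": "jane@example.com", "amount": 42.0, "country": "DE"}
print(view_for_role(order, "analyst"))  # {'order_id': 7, 'amount': 42.0, 'country': 'DE'}
print(view_for_role({**order, "email": mask_email(order["email"])}, "support"))
# {'order_id': 7, 'email': 'j***@example.com', 'country': 'DE'}
KEY = b"hypothetical-key-load-from-a-secrets-manager"
print(pseudonymize(order["email"], KEY))  # stable 16-hex-character token
```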

Storage Layer Considerations

While designing a data pipeline, choosing the right storage layer is one of the key best practices. For managing large datasets and data lakes, cloud storage platforms such as Amazon S3 or Google Cloud Storage offer flexibility and scalability. Structured analytical workloads benefit from data warehouse solutions optimized for query performance. More generally, match the storage technology to your requirements:

- For performance, choose in-memory databases for low latency and distributed databases for high throughput.

- For high-velocity data, opt for NoSQL databases or stream processing platforms.

- For large datasets, use distributed storage systems like HDFS or Amazon S3.

Challenges in Building Data Pipelines and Their Solutions

Building effective data pipelines integrated with data analytics presents various complexities and challenges. Here are some major challenges commonly encountered by businesses, along with solutions for creating scalable and efficient data pipelines.

Data Quality Assurance

Maintaining consistent data quality across various sources is one of the biggest hurdles in building and managing data pipelines. Issues such as incompleteness, inaccuracy, and inconsistency lead to misleading or unreliable analytics.

Solution: Implement robust data validation and cleansing processes, and integrate data profiling tools and anomaly-detection algorithms.
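
As a starting point, validation rules and a simple statistical anomaly check can be sketched as follows. The required fields, the allowed currency list, and the z-score threshold of 3 are hypothetical choices; the z-score test is just one simple technique, and profiling tools offer far richer checks.

```python
import statistics
from typing import List

REQUIRED = ("order_id", "amount", "currency")
ALLOWED_CURRENCIES = {"USD", "EUR", "INR"}  # hypothetical reference list


def validate(record: dict) -> List[str]:
    """Return the rule violations for one record; an empty list means it is valid."""
    errors = [f"missing {name}" for name in REQUIRED if record.get(name) in (None, "")]
    amount = record.get("amount")
    if amount not in (None, "") and not isinstance(amount, (int, float)):
        errors.append("amount is not numeric")
    elif isinstance(amount, (int, float)) and amount < 0:
        errors.append("negative amount")
    currency = record.get("currency")
    if currency and currency not in ALLOWED_CURRENCIES:
        errors.append(f"unknown currency {currency}")
    return errors


def is_anomalous(history: List[float], latest: float, z_threshold: float = 3.0) -> bool:
    """Flag a metric (e.g. daily row count) more than z_threshold std devs from its history."""
    if len(history) < 2:
        return False  # not enough history to judge
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        return latest != mean
    return abs(latest - mean) / stdev > z_threshold


print(validate({"order_id": 2, "amount": -5, "currency": "GBP"}))
# ['negative amount', 'unknown currency GBP']
daily_row_counts = [1000, 1020, 980, 1010, 990]
print(is_anomalous(daily_row_counts, 400))  # True: today's load looks incomplete
```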

Complexity in Data Integration

Data pipelines involve integrating data flow from diverse data sources, which can include both structured and unstructured data, making integration complex.

Solution: Select a flexible and scalable integration approach from the beginning. Choose effective data integration platforms and use APIs and middleware solutions to streamline data connectivity.
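
One way to keep integration flexible is the adapter pattern: each source gets a small function that maps its native format onto one shared target schema, so adding a source means adding an adapter rather than changing downstream code. The source formats and field names below are hypothetical.

```python
import csv
import io
import json
from typing import Callable, Dict, Iterator, List

Customer = Dict[str, str]  # target schema: customer_id, name, signup_date (YYYY-MM-DD)


def from_crm_csv(payload: str) -> Iterator[Customer]:
    """Adapter for a CSV export that uses its own column names."""
    for row in csv.DictReader(io.StringIO(payload)):
        yield {"customer_id": row["CustID"], "name": row["FullName"], "signup_date": row["Created"]}


def from_web_json(payload: str) -> Iterator[Customer]:
    """Adapter for a JSON API response with nested fields and ISO timestamps."""
    for user in json.loads(payload)["users"]:
        yield {
            "customer_id": str(user["id"]),
            "name": user["profile"]["name"],
            "signup_date": user["created_at"][:10],  # keep only the date part
        }


# New sources plug in by registering another adapter; downstream code never changes.
ADAPTERS: Dict[str, Callable[[str], Iterator[Customer]]] = {
    "crm_csv": from_crm_csv,
    "web_json": from_web_json,
}


def integrate(sources: Dict[str, str]) -> List[Customer]:
    """Normalize every (source type -> raw payload) pair into one unified list."""
    unified: List[Customer] = []
    for source_type, payload in sources.items():
        unified.extend(ADAPTERS[source_type](payload))
    return unified


rows = integrate({
    "crm_csv": "CustID,FullName,Created\nC1,Ada Lovelace,2024-01-05\n",
    "web_json": '{"users": [{"id": 42, "profile": {"name": "Alan Turing"}, "created_at": "2024-02-10T08:30:00Z"}]}',
})
for row in rows:
    print(row)
```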

Efficient Data Management

Managing data as its sources, flows, structures, and processing requirements change is a significant challenge for any business.

Solution: Use schema evolution strategies, manage data versioning, and design pipelines that are flexible and configuration-driven.
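
A configuration-driven approach to schema evolution can be sketched as a schema definition (defaults for added fields, a map of renamed fields) plus one function that conforms records from older versions to the current one. The schema contents are hypothetical; in a real pipeline they might live in a config file or a schema registry.

```python
from typing import Any, Dict

# Hypothetical current schema, kept as configuration rather than hard-coded logic.
SCHEMA_V2: Dict[str, Any] = {
    "version": 2,
    "fields": {"order_id": None, "amount": 0.0, "currency": "USD", "channel": "unknown"},  # name -> default
    "renamed": {"amt": "amount"},  # old name -> new name
}


def conform(record: Dict[str, Any], schema: Dict[str, Any] = SCHEMA_V2) -> Dict[str, Any]:
    """Map a record written under any older schema version onto the current one."""
    # 1. Apply renames so values written under an old name land in the right field.
    renamed = {schema["renamed"].get(k, k): v for k, v in record.items()}
    # 2. Fill fields added in later versions with their configured defaults.
    out = {name: renamed.get(name, default) for name, default in schema["fields"].items()}
    # 3. Keep unexpected fields aside instead of silently dropping them.
    extras = {k: v for k, v in renamed.items() if k not in schema["fields"]}
    if extras:
        out["_extras"] = extras
    out["_schema_version"] = schema["version"]
    return out


print(conform({"order_id": 1, "amt": 9.5}))
# {'order_id': 1, 'amount': 9.5, 'currency': 'USD', 'channel': 'unknown', '_schema_version': 2}
```

Supporting a new field or rename then becomes a configuration change rather than a code change in every stage.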

Partner with Rishabh Software to Unlock Actionable Insights from Streamlined Data Pipelines

We are your trusted data engineering company, helping businesses become more data-driven. Our team designs and builds high-performance data pipelines that adhere to industry best practices for optimal efficiency and reliability. We leverage the transformative power of data and artificial intelligence to help your business unlock meaningful insights at scale, backed by a robust data foundation, modernization, and platform management. Our services span discovery and assessment through the full data management cycle, including acquisition, cleansing, conversion, interpretation, and deduplication, so data is handled properly at every stage. We optimize data infrastructure, implement real-time streaming solutions, and enhance data visualization to deliver efficient processing and high-quality data analytics.

Our team of data engineers empowers businesses of all sizes to use their data smartly and efficiently to drive informed decision-making and reach new levels of organizational excellence.

Frequently Asked Questions

Q: What techniques can optimize data flow in a pipeline?

A: Techniques such as data partitioning, parallel processing, caching, and compression can be adopted to enhance the efficiency of data flow within pipelines.
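
Three of these techniques, partitioning, parallel processing, and caching, can be combined in a short sketch. Records are hash-partitioned by a key using `zlib.crc32`, which, unlike Python's built-in `hash()` for strings, is stable across processes; partitions are then processed concurrently, and a slow reference-data lookup is cached with `functools.lru_cache`. The exchange rates and field names are hypothetical.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor
from functools import lru_cache
from typing import List


def partition(records: List[dict], key: str, n: int) -> List[List[dict]]:
    """Hash-partition records so all rows with the same key land in the same partition."""
    parts: List[List[dict]] = [[] for _ in range(n)]
    for record in records:
        # crc32 is deterministic across processes, unlike hash() on str.
        parts[zlib.crc32(str(record[key]).encode("utf-8")) % n].append(record)
    return parts


@lru_cache(maxsize=1024)
def usd_rate(currency: str) -> float:
    """Cached reference-data lookup; these rates are hypothetical stand-ins for a slow call."""
    return {"USD": 1.0, "EUR": 1.1, "INR": 0.012}[currency]


def process_partition(part: List[dict]) -> float:
    return sum(r["amount"] * usd_rate(r["currency"]) for r in part)


def total_in_usd(records: List[dict], workers: int = 4) -> float:
    """Split the data, process partitions concurrently, then combine the partial results."""
    parts = partition(records, key="customer_id", n=workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(process_partition, parts))


records = [
    {"customer_id": f"c{i % 5}", "amount": 10.0, "currency": "EUR" if i % 2 else "USD"}
    for i in range(20)
]
print(round(total_in_usd(records), 2))  # 210.0
```

Threads suit I/O-bound work such as calling APIs or databases; for CPU-bound transformations, a `ProcessPoolExecutor` or a distributed engine such as Apache Spark is usually a better fit because of CPython's global interpreter lock.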

Q: What is data pipeline architecture?

A: Data pipeline architecture is the design framework that defines how data moves from sources to destinations. It can be implemented using various tools, technologies, and platforms.

Q: What is data pipeline automation?

A: Data pipeline automation involves using tools and scripts to automate the process of data extraction, transformation, and loading (ETL/ELT).
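
At its core, pipeline automation means running tasks in dependency order without manual steps. Orchestrators such as Apache Airflow model a pipeline as a directed acyclic graph (DAG) of tasks and add scheduling, retries, and monitoring on top; the sketch below shows only the dependency-ordering core, using the standard library's `graphlib` (Python 3.9+). The task names and bodies are hypothetical.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+
from typing import Callable, Dict, List, Set, Tuple


# Hypothetical tasks sharing a context dict; real tasks would call extract/transform/load code.
def extract(ctx: dict) -> None:
    ctx["raw"] = [3, 1, 2]


def transform(ctx: dict) -> None:
    ctx["clean"] = sorted(ctx["raw"])


def load(ctx: dict) -> None:
    ctx["loaded_rows"] = len(ctx["clean"])


TASKS: Dict[str, Callable[[dict], None]] = {"extract": extract, "transform": transform, "load": load}
DEPENDS_ON: Dict[str, Set[str]] = {"transform": {"extract"}, "load": {"transform"}}


def run_dag(tasks: Dict[str, Callable[[dict], None]],
            depends_on: Dict[str, Set[str]]) -> Tuple[List[str], dict]:
    """Run every task after its dependencies; TopologicalSorter raises CycleError on cycles."""
    graph = {name: depends_on.get(name, set()) for name in tasks}
    order = list(TopologicalSorter(graph).static_order())
    ctx: dict = {}
    for name in order:
        tasks[name](ctx)
    return order, ctx


order, ctx = run_dag(TASKS, DEPENDS_ON)
print(order)               # ['extract', 'transform', 'load']
print(ctx["loaded_rows"])  # 3
```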

Q: What is an example of data pipeline architecture?

A: A typical example is an ETL pipeline using Apache Kafka for real-time streaming, combined with a data lake such as Amazon S3 for storage and a data warehouse for analytics.